March 14, 2025 · Jimeng Sun, PhD, CEO of Keiji AI

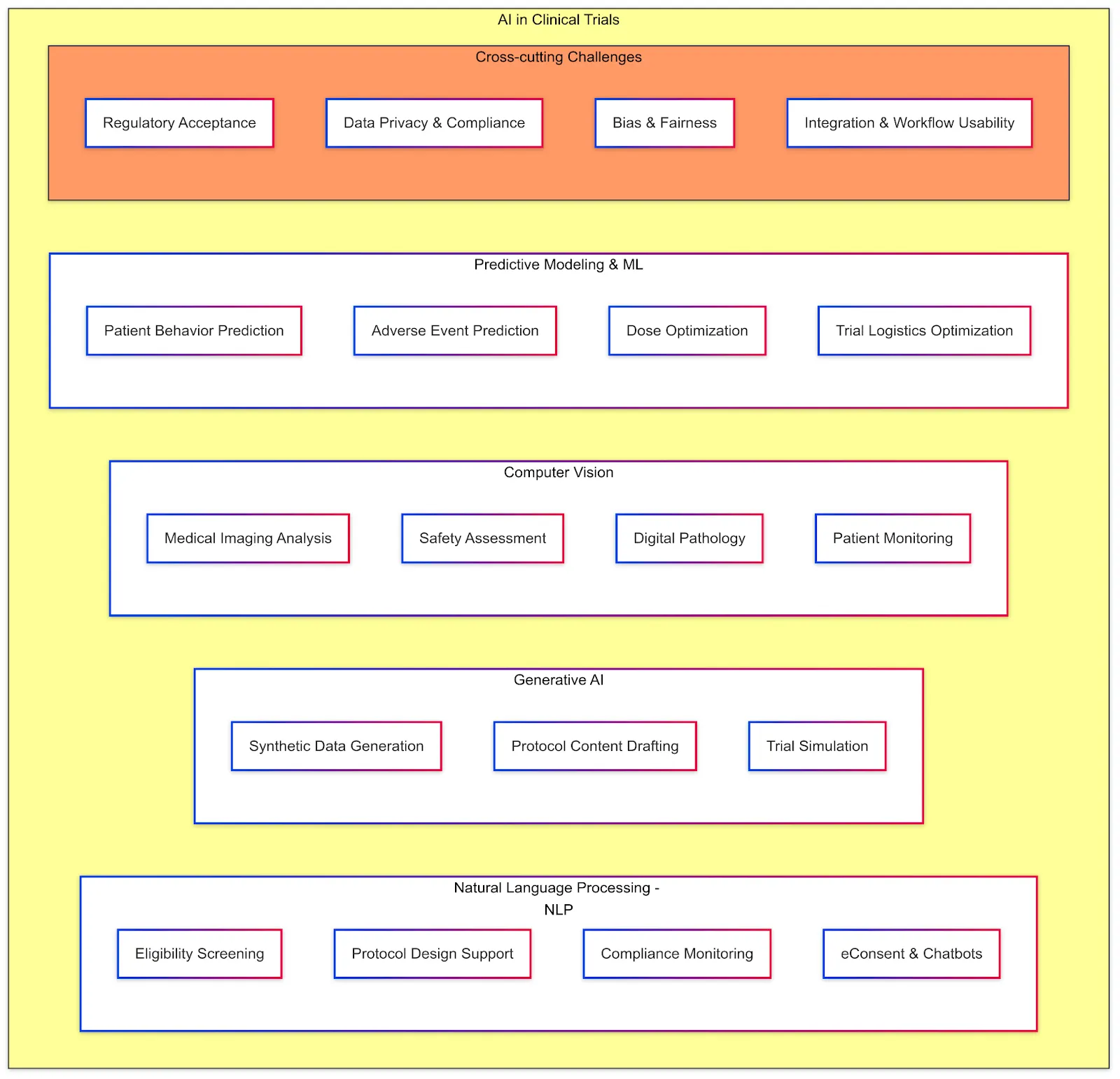

AI is increasingly being applied throughout the clinical trial lifecycle. Below are key AI techniques and their use cases in clinical trials, along with their benefits and challenges:

Natural Language Processing (NLP)

NLP deals with analyzing and generating human language. In clinical trials, a huge amount of information is in text form: protocols, investigator brochures, medical records, eligibility criteria, patient feedback, monitoring reports, and more. NLP models can read and interpret these texts, extract information from them, and generate useful responses, either in natural language (e.g., a chatbot) or as structured output (e.g., information extraction).

Applications: One major use of NLP is eligibility screening. By processing electronic health records and clinical notes, NLP models can identify patients who match a trial's inclusion/exclusion criteria much faster than manual chart review. For example, NIH researchers and Keiji AI have developed the TrialGPT algorithm to match trial inclusion/exclusion criteria with patient notes. Similarly, NLP can support protocol design by analyzing large corpora of prior protocols to suggest eligibility criteria or trial endpoints. It can also be used in compliance monitoring: clinical trial monitors write visit reports, and NLP models can mine these to identify common issues or protocol deviations across sites. Additionally, NLP underpins eConsent comprehension tools (ensuring language is at an appropriate reading level) and can power chatbots that answer participants' questions about the study by understanding their queries.
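To make criteria matching concrete, here is a deliberately simple rule-based sketch. It is not TrialGPT, which relies on large language models. It assumes a hypothetical note style, pulls out age, HbA1c, and insulin use with regular expressions, and checks them against illustrative criteria; the criteria and thresholds are invented. Missing information yields "needs review" rather than a guess.

```python
import re
from typing import Callable, Dict, List, Optional, Tuple

Features = Dict[str, Optional[float]]

def extract_features(note: str) -> Features:
    """Hypothetical regex extractors for a simple note style."""
    age = re.search(r"(\d{1,3})[- ]year[- ]old", note, re.IGNORECASE)
    a1c = re.search(r"HbA1c\s*(?:of|=|:)?\s*(\d+(?:\.\d+)?)\s*%", note, re.IGNORECASE)
    return {
        "age": float(age.group(1)) if age else None,
        "hba1c": float(a1c.group(1)) if a1c else None,
        # Naive keyword match with no negation handling
        "insulin": 1.0 if re.search(r"\binsulin\b", note, re.IGNORECASE) else 0.0,
    }

# (kind, label, check); a check returns None when the note lacks the needed data.
Criterion = Tuple[str, str, Callable[[Features], Optional[bool]]]

CRITERIA: List[Criterion] = [
    ("inclusion", "Age 18-75", lambda f: None if f["age"] is None else 18 <= f["age"] <= 75),
    ("inclusion", "HbA1c 7.0-10.0%",
     lambda f: None if f["hba1c"] is None else 7.0 <= f["hba1c"] <= 10.0),
    ("exclusion", "Current insulin use", lambda f: f["insulin"] == 1.0),
]

def screen(note: str) -> str:
    features = extract_features(note)
    missing = False
    for kind, _label, check in CRITERIA:
        result = check(features)
        if result is None:
            missing = True  # cannot decide from the note; flag for human review
        elif (kind == "inclusion" and not result) or (kind == "exclusion" and result):
            return "ineligible"
    return "needs review" if missing else "eligible"
```

The keyword check for insulin would also fire on "not on insulin", a small example of the misinterpretation risk that motivates learned language models over hand-written rules.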

Challenges: Clinical text can be messy, full of abbreviations and jargon. NLP models must be trained on medical data to perform well. There is a risk of misinterpretation; for instance, a model may identify the wrong patients if the notes are ambiguous. Ensuring data privacy when using patient records for NLP is important; typically, such systems operate on de-identified data or behind hospital firewalls. Regulators will want transparency: if NLP is used to include or exclude patients, sponsors should validate its accuracy to avoid inadvertent bias (e.g., certain populations' records might be less detailed, leading the NLP model to mismatch them). Despite these challenges, NLP has proven to be a game-changer in reducing manual effort: it transforms unstructured text into actionable data.

Generative AI

This refers to AI models that can create new content or simulate data. In clinical trials, generative AI is being explored in several ways.

Synthetic Data Generation: Using generative models, one can create synthetic patient data that statistically resembles real trial data without containing identifiable information. This can be useful for data sharing and algorithm training, for example generating a realistic dataset to develop an AI model when the actual data is confidential or limited. Some companies are working on synthetic control arms, in which generative models combined with historical data produce a "virtual" placebo group, potentially allowing a trial to enroll fewer real placebo patients.
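The core statistical idea, "resembles real data without copying rows", can be sketched under a strong simplifying assumption: two roughly normal continuous variables. The sketch estimates their means, standard deviations, and correlation, then samples new records from a bivariate normal with those parameters. Real generators (including the generative models mentioned here) handle many variables, mixed types, and non-normal distributions; the "real" records below are made up.

```python
import math
import random
from typing import List, Tuple

Params = Tuple[float, float, float, float, float]  # (mean_x, mean_y, sd_x, sd_y, rho)

def fit(pairs: List[Tuple[float, float]]) -> Params:
    """Sample means, standard deviations, and Pearson correlation of two variables."""
    n = len(pairs)
    xs, ys = [p[0] for p in pairs], [p[1] for p in pairs]
    mx, my = sum(xs) / n, sum(ys) / n
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs) / (n - 1))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys) / (n - 1))
    cov = sum((x - mx) * (y - my) for x, y in pairs) / (n - 1)
    return mx, my, sx, sy, cov / (sx * sy)

def sample(params: Params, n: int, seed: int = 0) -> List[Tuple[float, float]]:
    """Draw n synthetic pairs from a bivariate normal with the fitted parameters."""
    mx, my, sx, sy, rho = params
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        # Cholesky factor of [[1, rho], [rho, 1]] turns independent z's into correlated ones
        out.append((mx + sx * z1, my + sy * (rho * z1 + math.sqrt(1 - rho ** 2) * z2)))
    return out

# Made-up "real" records: (age in years, systolic blood pressure in mmHg)
REAL = list(zip([45, 52, 60, 38, 70, 55, 63, 48, 41, 67],
                [128, 135, 142, 121, 150, 138, 145, 130, 124, 147]))
synthetic = sample(fit(REAL), n=5000, seed=1)
```

Refitting the synthetic data recovers roughly the same means and correlation, which is the kind of fidelity check the challenges below call for, though real validation would go far beyond two summary statistics.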

Protocol Content Generation: Large language models (e.g., proprietary models such as OpenAI's GPT series and Anthropic's Claude, or open-source models such as Llama, Qwen, and DeepSeek) can draft parts of a protocol or statistical analysis plan (SAP) based on input parameters. For instance, given the trial objectives, a generative model might propose an outline of the inclusion criteria or suggest appropriate endpoints, which the human team can then refine. This can speed up protocol development.

Trial Simulation: Generative AI is capable of simulating patient trajectories under various scenarios, for instance predicting how tumor sizes may evolve over time in cancer trials. This approach essentially functions as an AI-based Monte Carlo simulation, helping trial planners understand potential outcomes. Such simulations can address data gaps, particularly in rare diseases with limited datasets, by generating additional realistic patient data to enhance analyses or support machine learning model training. Additionally, generative AI can personalize educational and training materials for clinical site staff by synthesizing realistic patient conversations or voices, thus improving preparation for patient interactions.
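A toy Monte Carlo sketch of the tumor example, under an assumed model rather than a generative AI model: each simulated patient's tumor follows size(t) = size0 · exp(g · t), with a patient-specific monthly rate g drawn from a normal distribution (negative g means shrinkage). The parameters are invented; the 30% reduction threshold borrows RECIST's partial-response cutoff, applied here to a single size for simplicity.

```python
import math
import random

def simulate_response_rate(mean_rate: float, sd_rate: float, months: float,
                           n_patients: int = 10_000, seed: int = 0) -> float:
    """Share of simulated patients whose tumor size falls by >= 30% from baseline.

    Toy model (assumption): size(t) = size0 * exp(g * t), with the monthly log-growth
    rate g ~ Normal(mean_rate, sd_rate) per patient; negative g means shrinkage.
    """
    rng = random.Random(seed)
    responders = 0
    for _ in range(n_patients):
        g = rng.gauss(mean_rate, sd_rate)
        ratio = math.exp(g * months)  # size at `months` relative to baseline
        if ratio <= 0.7:
            responders += 1
    return responders / n_patients

# Compare two hypothetical scenarios at 6 months
treated = simulate_response_rate(mean_rate=-0.05, sd_rate=0.04, months=6)
untreated = simulate_response_rate(mean_rate=0.02, sd_rate=0.04, months=6)
```

Because this toy model has a closed form, the simulated rate can be checked against the normal CDF; simulation earns its keep once trajectories include dropout, dose changes, or measurement noise that make closed forms impractical.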

Software and Workflow Testing: Synthetic data generated by AI models can also be employed to rigorously test clinical trial software and workflows. For example, simulating trial progression with synthetic patient data can validate the reliability and consistency of trial startup procedures and electronic data capture (EDC) systems. This proactive approach helps identify potential issues before real patient data collection begins, ensuring smoother trial execution.

Challenges and Considerations: The primary concern around generative AI in clinical trials is ensuring the fidelity and validity of synthetic data. Such data must be rigorously validated against real-world patterns to prevent misleading analyses or model outcomes. For AI-generated textual content like protocols, careful review is crucial to ensure accuracy, compliance with regulatory requirements, and patient safety. Additionally, there is a risk of perpetuating biases: if generative models are trained on historically biased datasets, the synthetic data could replicate the underrepresentation of certain demographic groups. Despite these challenges, generative AI is a promising supplement to human expertise and real-world data, augmenting clinical trials rather than replacing traditional methodologies.

Computer Vision

Computer vision (CV) encompasses the use of AI to analyze images and videos, providing substantial value in clinical trials where visual data is crucial. Prominent applications include:

Medical Imaging Analysis: Many clinical trials, particularly in oncology, radiology, dermatology, and ophthalmology, rely heavily on imaging endpoints such as tumor scans, X-rays, retinal photographs, and skin lesion images. AI-driven CV algorithms can rapidly and consistently analyze these visual datasets. For instance, in oncology trials, algorithms can measure tumor volumes from MRI scans to accurately assess disease progression or treatment response, potentially surpassing human radiologists in consistency and speed.
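Once a segmentation model has labeled which voxels belong to the tumor, the volume measurement itself is simple arithmetic: voxel count times the physical volume of one voxel (the product of the voxel spacings). A sketch assuming a binary 3-D mask stored as nested lists and spacing in millimetres; the masks and spacing are made up, and the segmentation step, which is the hard part, is not shown.

```python
from typing import List, Tuple

Mask = List[List[List[int]]]  # mask[z][y][x] == 1 where the model labeled tumor

def tumor_volume_ml(mask: Mask, spacing_mm: Tuple[float, float, float]) -> float:
    """Tumor volume in millilitres (1 mL = 1000 mm^3)."""
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    n_voxels = sum(v for plane in mask for row in plane for v in row)
    return n_voxels * voxel_mm3 / 1000.0

def percent_change(baseline_ml: float, follow_up_ml: float) -> float:
    return 100.0 * (follow_up_ml - baseline_ml) / baseline_ml

# Tiny illustrative masks: 8 tumor voxels at baseline, 4 at follow-up
baseline = [[[1, 1], [1, 1]], [[1, 1], [1, 1]]]
follow_up = [[[1, 1], [1, 0]], [[1, 0], [0, 0]]]
spacing = (1.0, 1.0, 5.0)  # assumed: 1 mm in-plane, 5 mm slice thickness
change = percent_change(tumor_volume_ml(baseline, spacing), tumor_volume_ml(follow_up, spacing))
```

Here the tumor halves from 0.04 mL to 0.02 mL, a 50% reduction; the same code applies unchanged to full-size masks.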

Safety Assessments: CV algorithms can be used to detect adverse events early. Examples include analyzing liver ultrasound images for early signs of drug-induced liver injury or evaluating patient-submitted injection site photos to promptly identify adverse reactions or complications.

Digital Pathology: Trials involving biopsy samples benefit significantly from AI-powered vision analysis. CV algorithms can quantitatively analyze histopathology slides to accurately measure biomarker expression or characterize disease morphology, enhancing precision and reproducibility in assessments.
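A common way to turn per-cell staining calls into a biomarker expression score is the immunohistochemistry H-score: the percentage of cells at each intensity (0 = none, 1 = weak, 2 = moderate, 3 = strong) weighted by that intensity, giving a value from 0 to 300. The sketch assumes a CV model has already detected cells and assigned each an intensity class; that input format is an assumption.

```python
from collections import Counter
from typing import Iterable

def h_score(intensities: Iterable[int]) -> float:
    """H-score = 1*(% weak) + 2*(% moderate) + 3*(% strong); range 0-300."""
    counts = Counter(intensities)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no cells detected")
    if any(level not in (0, 1, 2, 3) for level in counts):
        raise ValueError("intensity classes must be 0, 1, 2, or 3")
    return sum(level * 100.0 * n / total for level, n in counts.items())

# Illustrative per-cell calls: 40% negative, 30% weak, 20% moderate, 10% strong
cells = [0] * 40 + [1] * 30 + [2] * 20 + [3] * 10
score = h_score(cells)
```

For these calls the score is 30 + 40 + 30 = 100. Automating the per-cell classification is what makes such scores reproducible across thousands of cells and many slides.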

Patient Monitoring: In neurological or movement-disorder trials, CV models analyze video recordings of patient motor function tests, objectively quantifying gait and movement patterns. This provides standardized metrics, reducing observer variability.

Ophthalmology Applications: In ophthalmology trials, CV has been successfully implemented as a secondary reader for diagnosing conditions like diabetic retinopathy. For example, Google's deep learning models for retinal image analysis demonstrated performance on par with human experts, showcasing CV's effectiveness in clinical contexts.
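When CV acts as a secondary reader, its reads are typically compared with expert grading. A sketch computing sensitivity, specificity, and Cohen's kappa (agreement beyond chance) from paired binary reads, treating the expert as the reference; the reads below are invented and are not results from any published model.

```python
from typing import Dict, Sequence

def reader_agreement(ai: Sequence[int], expert: Sequence[int]) -> Dict[str, float]:
    """Binary reads (1 = referable disease); the expert read is the reference."""
    if len(ai) != len(expert) or not ai:
        raise ValueError("need equal-length, non-empty read lists")
    tp = sum(1 for a, e in zip(ai, expert) if a == 1 and e == 1)
    tn = sum(1 for a, e in zip(ai, expert) if a == 0 and e == 0)
    fp = sum(1 for a, e in zip(ai, expert) if a == 1 and e == 0)
    fn = sum(1 for a, e in zip(ai, expert) if a == 0 and e == 1)
    n = len(ai)
    observed = (tp + tn) / n
    # Agreement expected by chance, from each reader's marginal positive rate
    p_ai, p_ex = (tp + fp) / n, (tp + fn) / n
    expected = p_ai * p_ex + (1 - p_ai) * (1 - p_ex)
    # Kappa is undefined when both readers use a single class for every image
    kappa = (observed - expected) / (1 - expected) if expected < 1 else float("nan")
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "kappa": kappa,
    }

expert_reads = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
ai_reads = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
metrics = reader_agreement(ai_reads, expert_reads)
```

With these ten reads the AI has sensitivity 0.75, specificity 5/6, and kappa about 0.58; a real validation would use far larger, pre-specified reading sets.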

Advantages: AI-based CV systems handle large image datasets efficiently and consistently, potentially uncovering subtle patterns overlooked by human observers — such as micro-patterns predictive of disease progression. These systems reduce variability due to human fatigue or inconsistent judgment, ensuring consistent endpoint evaluations and facilitating earlier detection of treatment effects through novel imaging biomarkers.

Challenges and Considerations: Implementing computer vision in clinical trials requires addressing several critical issues:

- Validation and Regulatory Approval: AI algorithms used for primary endpoints must be rigorously validated against expert assessments. They often require regulatory approval as medical device software.

- Explainability: There is an ongoing demand for AI transparency. Investigators and regulators frequently request clear explanations of AI decisions — such as highlighting specific image areas contributing to the AI's diagnostic conclusions — to ensure clinical relevance and trust.

- Data Quality: AI performance is highly sensitive to image quality. Ensuring standardized image acquisition protocols (e.g., consistent CT or MRI scanner settings across sites) is essential to maintain reliable AI outputs.

- Infrastructure Requirements: Effective deployment of CV algorithms demands robust digital infrastructure to centralize image collection and process data efficiently, necessitating careful planning and coordination.

Despite these challenges, the potential of computer vision in clinical trials to streamline processes, enhance accuracy, and expedite insights remains highly promising, supporting its growing adoption.

Predictive Modeling and Machine Learning

Predictive modeling and machine learning (ML) encompass a broad set of approaches applying AI algorithms to structured clinical trial data, enabling predictions, classifications, and enhanced decision-making processes. Key applications include:

Site Selection: ML models support site selection by predicting site performance from historical trial data, site-specific metrics, and demographic information. Predictive analytics can identify high-performing sites likely to meet recruitment targets and maintain data quality, thus optimizing trial resource allocation and increasing the likelihood of trial success.
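One simple, well-established way to predict site enrollment from history is a gamma-Poisson model: treat each site's monthly enrollment as Poisson with an unknown rate, place a gamma prior on that rate, and use the posterior mean, (prior shape + patients enrolled) / (prior rate + months active). This shrinks sites with short histories toward the prior instead of over-trusting a lucky first month. The prior values and site histories below are assumptions; in practice the prior would be estimated from many past trials.

```python
from typing import Dict, List, Tuple

def posterior_rate(enrolled: int, months: float, prior_shape: float, prior_rate: float) -> float:
    """Posterior mean patients/month under a Gamma(shape, rate) prior and Poisson counts."""
    return (prior_shape + enrolled) / (prior_rate + months)

def rank_sites(history: Dict[str, Tuple[int, float]], prior_shape: float = 2.0,
               prior_rate: float = 1.0) -> List[Tuple[str, float]]:
    """history maps site -> (patients enrolled, months active); best site first."""
    scored = [(site, posterior_rate(n, m, prior_shape, prior_rate))
              for site, (n, m) in history.items()]
    return sorted(scored, key=lambda s: s[1], reverse=True)

# Hypothetical histories: A had one strong month, B a long solid record, C a slow record
history = {"A": (6, 1.0), "B": (60, 12.0), "C": (10, 10.0)}
ranking = rank_sites(history)
```

With this illustrative prior (mean 2 patients/month), site B outranks site A even though A's raw rate (6/month) is higher than B's (5/month), because A's single month carries little evidence.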

Patient Behavior Prediction: ML models can forecast participant behaviors, such as identifying patients at risk of dropping out. By analyzing historical trial data — including demographics, site characteristics, visit adherence, and engagement metrics — these algorithms can proactively highlight participants who may require additional engagement efforts to maintain trial participation.
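A minimal sketch of a dropout-risk classifier: logistic regression fitted by batch gradient descent on two hypothetical features, the fraction of scheduled visits attended and the fraction of days the study app was used. The training records are invented; a production model would use many more features, proper validation, and most likely an established library such as scikit-learn.

```python
import math
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X: List[Sequence[float]], y: List[int],
                   lr: float = 0.5, epochs: int = 3000) -> Tuple[List[float], float]:
    """Batch gradient descent on mean log-loss; returns (weights, bias)."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def dropout_risk(w: List[float], b: float, x: Sequence[float]) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

# Invented records: (visit adherence, app-use fraction); label 1 = dropped out
X = [(0.95, 0.9), (0.9, 0.7), (0.85, 0.8), (0.6, 0.2), (0.5, 0.1),
     (0.4, 0.3), (0.8, 0.3), (0.55, 0.6), (1.0, 0.5), (0.45, 0.05)]
y = [0, 0, 0, 1, 1, 1, 0, 1, 0, 1]
w, b = train_logistic(X, y)
```

Participants whose predicted risk crosses a chosen threshold could then be routed to extra engagement, keeping in mind that the prediction is probabilistic decision support.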

Safety Risk Prediction: Models can predict adverse events or patient safety risks by analyzing baseline biomarkers, vital signs, and patient history data. Identifying participants likely to experience severe side effects enables more targeted and intensive monitoring, enhancing patient safety.

Dose Optimization: Predictive algorithms can dynamically optimize dosing during trials, particularly in the adaptive dose-escalation designs common in Phase I oncology studies. ML models leverage real-time data to guide dose-escalation decisions, helping to improve safety and efficacy outcomes.
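A simplified Bayesian sketch of model-guided escalation, swapped in for illustration; it is not the continual reassessment method (CRM), any ML model, or any specific published design. Each dose level gets an independent uniform Beta(1, 1) prior on its dose-limiting toxicity (DLT) rate, updated with observed DLTs, and the rule keeps the highest level whose posterior probability of exceeding a 30% target toxicity is below 0.25. The target, cutoff, and data are assumptions. For integer Beta parameters the tail probability has an exact binomial form, which keeps the code dependency-free.

```python
import math
from typing import List, Optional, Tuple

def overdose_probability(n: int, dlt: int, target: float) -> float:
    """P(DLT rate > target) under a Beta(1 + dlt, 1 + n - dlt) posterior (uniform prior)."""
    a, b = 1 + dlt, 1 + n - dlt
    m = a + b - 1
    # For integer a, b: P(Beta(a, b) > t) = P(Binomial(a + b - 1, t) <= a - 1)
    return sum(math.comb(m, k) * target ** k * (1 - target) ** (m - k) for k in range(a))

def highest_safe_dose(data: List[Tuple[int, int]], target: float = 0.30,
                      cutoff: float = 0.25) -> Optional[int]:
    """data[i] = (patients treated, DLTs observed) at dose level i, lowest dose first.

    Scans upward and stops at the first untested or unsafe level (no dose skipping).
    Real designs also borrow information across doses and enforce monotone toxicity.
    """
    safe: Optional[int] = None
    for level, (n, dlt) in enumerate(data):
        if n == 0 or overdose_probability(n, dlt, target) >= cutoff:
            break
        safe = level
    return safe

# Hypothetical cohort data for four dose levels
recommended = highest_safe_dose([(3, 0), (3, 0), (9, 1), (3, 2)])
```

With these data, levels 0 to 2 pass (level 2 has 1 DLT in 9 patients), while 2 DLTs in 3 patients at level 3 gives an overdose probability above 0.9, so the rule keeps level 2.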

Adaptive Trial Design: ML-driven predictive models continually analyze incoming trial data, allowing adjustments such as re-estimating the sample size, adapting randomization, or stopping a trial early for efficacy or futility. This dynamic approach improves trial efficiency and resource allocation.
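A sketch of one common interim-analysis rule, assuming a binary response endpoint and independent Beta(1, 1) priors for each arm: estimate the posterior probability that the treatment response rate exceeds control with Monte Carlo draws, then stop for efficacy above an upper threshold or for futility below a lower one. The thresholds (0.99 and 0.10) and counts are illustrative; real adaptive designs pre-specify such rules and calibrate them by simulation to control error rates.

```python
import random

def prob_treatment_better(resp_t: int, n_t: int, resp_c: int, n_c: int,
                          draws: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo estimate of P(p_treatment > p_control | data) with Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        p_t = rng.betavariate(1 + resp_t, 1 + n_t - resp_t)
        p_c = rng.betavariate(1 + resp_c, 1 + n_c - resp_c)
        if p_t > p_c:
            wins += 1
    return wins / draws

def interim_decision(resp_t: int, n_t: int, resp_c: int, n_c: int,
                     efficacy: float = 0.99, futility: float = 0.10) -> str:
    p = prob_treatment_better(resp_t, n_t, resp_c, n_c)
    if p >= efficacy:
        return "stop for efficacy"
    if p <= futility:
        return "stop for futility"
    return "continue"

# Hypothetical interim: 30/40 responders on treatment vs 10/40 on control
decision = interim_decision(30, 40, 10, 40)
```

A near-even split such as 22/40 versus 20/40 would fall between the thresholds and the trial would continue to the next look.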

Operational Optimization: Predictive modeling assists in optimizing logistical aspects, including forecasting enrollment rates, identifying optimal recruitment channels, and anticipating potential delays. Utilizing historical trial data, these models facilitate proactive management, enabling timely interventions and efficient trial operations.
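Enrollment forecasting can be sketched with a Poisson assumption: if sites enroll independently at constant rates λ_i patients/month, total enrollment is a Poisson process with rate Λ = Σλ_i, so the time to reach N patients follows a Gamma(N, 1/Λ) distribution. The sketch simulates that time and reports the median and a 90th-percentile planning figure. The site rates and target are made up, and constant rates (no activation delays or seasonality) are a simplifying assumption.

```python
import random
from typing import Sequence, Tuple

def months_to_target(site_rates: Sequence[float], target: int,
                     sims: int = 10_000, seed: int = 0) -> Tuple[float, float]:
    """Return (median, 90th percentile) months to enroll `target` patients."""
    total_rate = sum(site_rates)  # superposition of independent Poisson processes
    rng = random.Random(seed)
    # Waiting time to the target-th arrival is Gamma(shape=target, scale=1/total_rate)
    times = sorted(rng.gammavariate(target, 1.0 / total_rate) for _ in range(sims))
    return times[sims // 2], times[int(0.9 * sims)]

# Hypothetical per-site monthly rates; total 5 patients/month, target 100 patients
median, p90 = months_to_target([2.0, 1.5, 0.5, 1.0], target=100)
```

Here the expected time is 100 / 5 = 20 months; the 90th percentile gives a more conservative figure for planning, and rerunning with a site removed shows how much a single delay would push timelines.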

Real-world Example: Some clinical trial management dashboards now incorporate risk-based monitoring (RBM) systems driven by ML. These platforms use site performance indicators — such as data entry delays, query rates, and protocol deviations — to predict and prioritize sites needing closer oversight, enhancing trial quality and compliance.
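A minimal sketch of KPI-based site risk scoring, assuming three hypothetical per-site indicators (data entry delay in days, queries per 100 data points, protocol deviations per patient): standardize each indicator across sites as a z-score, average them into a composite, and flag sites above a cutoff. This is a simple statistical rule rather than a trained ML model; production RBM systems use more indicators and learned weights, and the cutoff and site data here are invented.

```python
import statistics
from typing import Dict, List

def flag_sites(kpis: Dict[str, List[float]], cutoff: float = 1.0) -> List[str]:
    """kpis maps site -> [entry_delay_days, queries_per_100, deviations_per_patient].

    Higher values mean higher risk for every indicator. Returns flagged sites,
    highest composite score first.
    """
    sites = list(kpis)
    n_kpi = len(next(iter(kpis.values())))
    composite = {s: 0.0 for s in sites}
    for j in range(n_kpi):
        col = [kpis[s][j] for s in sites]
        mu, sd = statistics.mean(col), statistics.pstdev(col)
        for s in sites:
            # Average of per-indicator z-scores; constant indicators contribute 0
            composite[s] += ((kpis[s][j] - mu) / sd if sd > 0 else 0.0) / n_kpi
    return sorted((s for s in sites if composite[s] > cutoff), key=composite.get, reverse=True)

site_kpis = {
    "S01": [3, 4, 0.10], "S02": [4, 5, 0.20], "S03": [2, 3, 0.10],
    "S04": [12, 15, 0.90], "S05": [3, 4, 0.15],
}
flagged = flag_sites(site_kpis)
```

Only S04, which lags on all three indicators, is flagged here; monitors would then prioritize it for closer oversight.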

Challenges and Considerations: While predictive modeling offers significant benefits, several critical challenges must be managed:

- Accuracy and Reliability: Predictive models inherently produce probabilistic outcomes, risking false positives and false negatives. Incorrect predictions can lead to misallocated resources or missed intervention opportunities. Consequently, ML predictions should be used as decision support rather than definitive guides.

- Regulatory Compliance: Using predictive models in trial operations involves regulatory considerations. Regulators such as the FDA, through initiatives like its Complex Innovative Trial Design (CID) program, encourage innovative trial methodologies but demand rigorous validation, transparency, and thorough documentation to demonstrate statistical integrity and ensure patient protection.

- Model Explainability: Transparency in predictive models is essential, especially when these models influence critical trial decisions like early termination. Interpretable statistical models or advanced ML methods paired with explanatory tools are preferred to enhance understanding and regulatory acceptance.

Overall, predictive modeling significantly enhances clinical trial capabilities, provided these methodological, ethical, and regulatory challenges are proactively addressed.

Challenges and Considerations Across AI Applications

Regulatory acceptance of AI in clinical trials is still evolving. Agencies such as the FDA have begun to issue guidance on AI in medical devices and have hosted discussions on AI in trial design. A clear challenge is validation: any AI that influences trial data or participant safety must be thoroughly tested to ensure it meets the same standards as traditional methods. For example, if AI is used to evaluate an endpoint, one must demonstrate that it performs as well as the current gold-standard measurement.
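For a continuous endpoint such as tumor volume, one standard way to compare an AI measurement with the gold standard is Bland-Altman analysis: the mean difference (bias) between methods and the 95% limits of agreement, bias ± 1.96 × SD of the differences. A sketch with invented paired measurements; what counts as an acceptable bias or limit width would have to be pre-specified on clinical grounds.

```python
import statistics
from typing import Sequence, Tuple

def bland_altman(ai: Sequence[float], reference: Sequence[float]) -> Tuple[float, float, float]:
    """Return (bias, lower limit, upper limit) of agreement for AI minus reference."""
    if len(ai) != len(reference) or len(ai) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - r for a, r in zip(ai, reference)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD of the paired differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

# Invented paired tumor volumes (mL): AI vs expert reference
ai_ml = [10.2, 15.1, 8.0, 22.4, 13.0]
ref_ml = [10.0, 15.0, 8.3, 22.0, 12.8]
bias, lower, upper = bland_altman(ai_ml, ref_ml)
```

A small bias with narrow limits supports interchangeability with the reference method; a systematic bias or limits wider than the clinically relevant change would not.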

Data privacy is another overarching concern. Trials operate under strict privacy laws; introducing AI often means introducing large datasets (sometimes external data) and new data flows. Sponsors must ensure compliance with GDPR, HIPAA, etc., and often need to de-identify data before AI model development.
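Two common building blocks of de-identification are pseudonymizing direct identifiers with a keyed hash (the same patient always maps to the same token, but the token cannot be reversed without the key) and shifting dates by a per-patient offset (which preserves intervals between a patient's events). A sketch using Python's standard `hmac` and `hashlib` modules; the record fields and offset range are hypothetical, and these steps alone do not make a dataset compliant with HIPAA or GDPR.

```python
import datetime as dt
import hashlib
import hmac
import random
from typing import Dict

def pseudonymize(record: Dict[str, str], key: bytes, max_shift_days: int = 30) -> Dict[str, str]:
    """Hypothetical input fields: 'mrn', 'name', 'visit_date' (ISO format), 'diagnosis'."""
    # Keyed hash: stable per patient, not reversible without the secret key
    token = hmac.new(key, record["mrn"].encode("utf-8"), hashlib.sha256).hexdigest()[:16]
    # Seed the offset from the token so all of a patient's dates shift by the same amount
    shift = random.Random(token).randint(-max_shift_days, max_shift_days)
    visit = dt.date.fromisoformat(record["visit_date"]) + dt.timedelta(days=shift)
    # The MRN and name are dropped; only the fields below are released
    return {"patient_id": token, "visit_date": visit.isoformat(), "diagnosis": record["diagnosis"]}

KEY = b"secret-key-held-by-the-data-custodian"  # illustrative only; store real keys securely
v1 = pseudonymize({"mrn": "123456", "name": "Jane Doe",
                   "visit_date": "2024-03-01", "diagnosis": "T2D"}, KEY)
v2 = pseudonymize({"mrn": "123456", "name": "Jane Doe",
                   "visit_date": "2024-03-15", "diagnosis": "T2D"}, KEY)
```

Both visits receive the same token and stay 14 days apart, so longitudinal analyses still work without exposing the identifier or the true dates.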

Bias is a major topic: clinical trial data itself may not be representative of the real-world patient population, since women, racial and ethnic minorities, and older patients have historically been under-enrolled in trials. If AI models are trained on these biased data, they could perpetuate or even worsen disparities. It is crucial to deliberately include diverse data when training AI and to monitor AI outputs for bias. On the flip side, AI can be part of the solution to trial diversity by identifying and engaging underrepresented groups (e.g., using social media data to reach minority communities for recruitment).
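Monitoring AI outputs for bias can start with computing the same metric per demographic group and comparing. A sketch that computes each group's true positive rate (the share of truly eligible patients the model flagged) and the ratio of the lowest to the highest rate; the records and group labels are invented, and any alert threshold on the ratio would be a policy choice.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def tpr_by_group(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """records: (group, truly_eligible, model_flagged). Returns recall per group."""
    hits: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, truth, pred in records:
        if truth == 1:
            positives[group] += 1
            hits[group] += pred
    return {g: hits[g] / positives[g] for g in positives}

def disparity_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by highest; 1.0 means parity."""
    top = max(rates.values())
    return min(rates.values()) / top if top > 0 else 1.0

# Invented screening results for two groups
records = ([("A", 1, 1)] * 9 + [("A", 1, 0)] + [("A", 0, 0)] * 5
           + [("B", 1, 1)] * 6 + [("B", 1, 0)] * 4 + [("B", 0, 1)] * 2)
rates = tpr_by_group(records)
ratio = disparity_ratio(rates)
```

In this example the model finds 90% of eligible patients in group A but only 60% in group B, a ratio of about 0.67 that would warrant investigating, for instance, whether group B's records are less detailed.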

Another challenge is integration: AI tools need to fit into the existing clinical trial workflow. Investigators and site staff are already burdened with many systems; an AI tool will gain traction only if it's user-friendly and integrates with, say, the CTMS or EDC they already use (no one wants yet another login and interface). Companies are thus embedding AI into familiar software — e.g., adding an "AI assistant" in the EDC that suggests queries or a widget in the trial management portal that predicts enrollment.

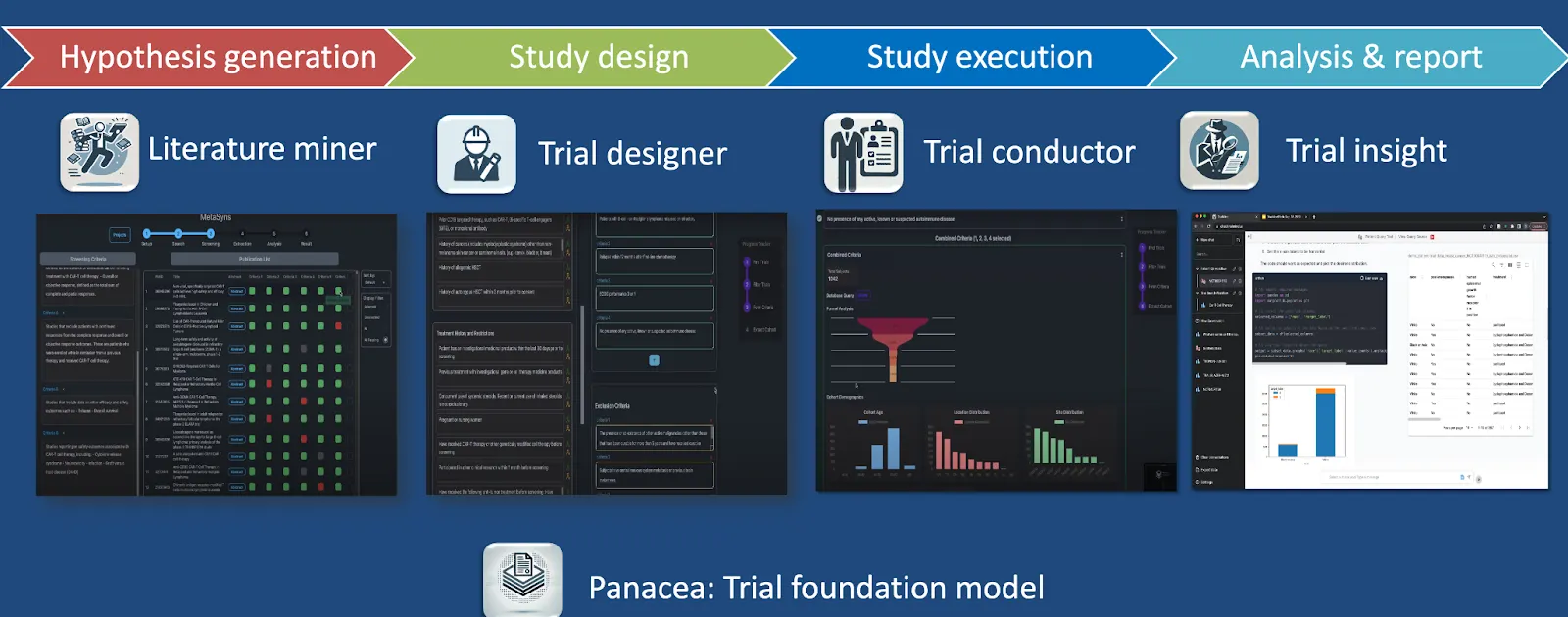

AI-Driven Case Studies and Recent Advancements (Keiji AI)

Keiji AI leverages specialized AI agents and generative AI models across various aspects of clinical trials, significantly enhancing efficiency, data quality, and innovation throughout the trial lifecycle. Below are specific areas and advancements driven by Keiji AI:

Clinical Trial Optimization with TrialMind Platform

The TrialMind platform is Keiji AI's generative AI clinical trial optimization tool, designed to streamline processes from hypothesis generation through study execution and reporting. It is powered by the Panacea foundation model, trained on extensive clinical trial data and literature, enabling robust hypothesis generation, efficient trial design, and rapid study execution.

Literature Mining

Keiji AI's LEADS platform integrates human expertise with AI-driven literature mining, enabling significant time savings in clinical evidence synthesis. It outperforms state-of-the-art models such as GPT-4o and achieves a 23% time saving in literature screening tasks.

Trial Design and Eligibility Criteria Generation

Keiji AI developed AutoTrial, the first large language model specialized explicitly for clinical trial design, trained on over 400,000 clinical trial records and 300,000 instructional data points. This tool facilitates the rapid generation of accurate and regulatory-compliant eligibility criteria.

Patient Recruitment and Matching

The TrialGPT tool by Keiji AI significantly accelerates patient recruitment by using generative AI to match patients to clinical trials. It achieves 87% accuracy, approaching expert-level assessment, and saves approximately 43% of the time traditionally needed to match patients to suitable trials.

Synthetic Data Generation

Keiji AI employs generative AI to create realistic synthetic patient data, maintaining high covariate correlation across over 10,000 medical variables. This synthetic data significantly enhances downstream clinical modeling and analysis performance.

Digital Twins and Patient Simulation

Keiji AI's FairPlay framework addresses bias in electronic health record (EHR) databases through counterfactual digital twin simulation. This approach creates balanced datasets by generatively upsampling underrepresented groups, improving classification accuracy and fairness in clinical outcomes.
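FairPlay, as described above, relies on generative counterfactual simulation. To make "balanced dataset" concrete, the sketch below uses a much simpler and plainly different baseline, random oversampling: records from smaller groups are resampled with replacement until every group matches the largest one. Unlike generative upsampling it only duplicates existing records; the `group` field name is an assumption.

```python
import random
from collections import defaultdict
from typing import Dict, List

def oversample_to_balance(records: List[Dict], group_key: str = "group",
                          seed: int = 0) -> List[Dict]:
    """Random oversampling baseline (not FairPlay): duplicate minority-group records."""
    rng = random.Random(seed)
    by_group: Dict[str, List[Dict]] = defaultdict(list)
    for r in records:
        by_group[r[group_key]].append(r)
    target = max(len(rs) for rs in by_group.values())
    balanced: List[Dict] = []
    for rs in by_group.values():
        balanced.extend(rs)
        # Draw with replacement from this group's own records to reach the target size
        balanced.extend(rng.choice(rs) for _ in range(target - len(rs)))
    return balanced

# Illustrative EHR-like records: group B is underrepresented
records = [{"group": "A", "y": i % 2} for i in range(8)] + [
    {"group": "B", "y": 1}, {"group": "B", "y": 0}]
balanced = oversample_to_balance(records)
```

Because duplicates add no new information, this baseline can encourage overfitting to the few minority records, which is the gap generative approaches aim to close.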

Data Science Assistance

Keiji AI provides an AI-driven Data Science Assistant for biomedical and statistical analyses, incorporating multiple large language models and prompting methods. The tool significantly improves code accuracy and reuse, and it supports both autonomous agents and human-AI collaborative approaches to biomedical data analysis.

High-impact Research

Keiji AI has contributed substantially to AI-driven clinical trial methodologies, publishing impactful studies on patient-trial matching, synthetic patient record generation, clinical trial outcome prediction, and site selection. Notable publications include models such as Trial2Vec, HINT, and SPOT, demonstrating significant advances in AI methods applied to clinical trial outcomes, patient matching, and predictive modeling.

Through these advancements, Keiji AI is reshaping the clinical trial landscape by increasing efficiency, accuracy, and fairness in clinical research processes.