December 1, 2025 · Venugopal Thati, Chief Architect of Keiji AI

Introduction: The "Day Two" Problem

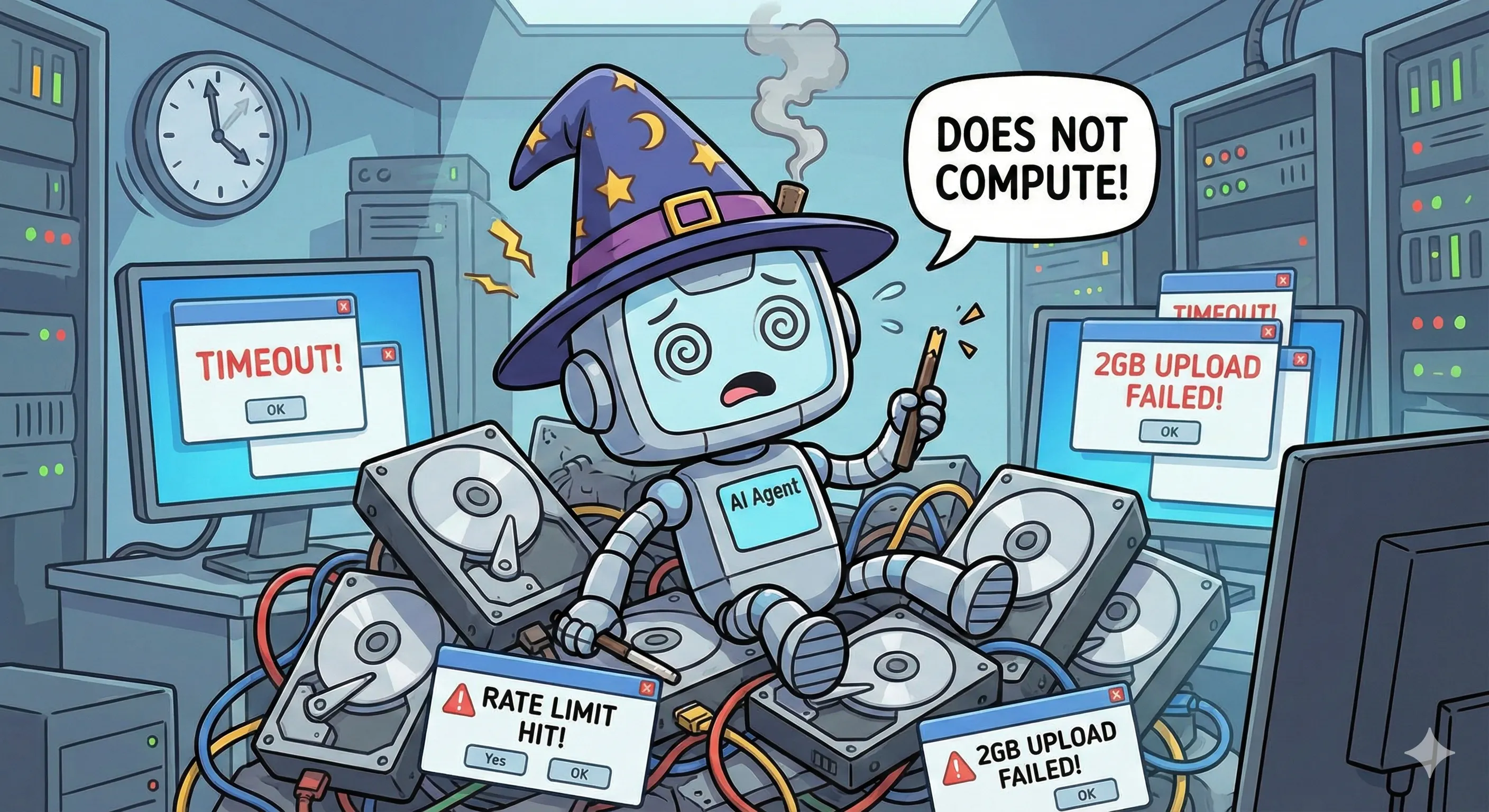

Anyone can build an AI agent in a weekend. With a few API calls and a basic prompt, you can get an LLM to answer questions or write code. It feels magical.

But then "Day Two" happens. A customer uploads a 2 GB dataset, or your agent needs to work with terabytes of customer data. The network drops mid-request, or the LLM generates code with syntax errors or dependencies that aren't installed in your environment. The API hits a rate limit, and suddenly your magical demo is a reliability nightmare.

Building a production-grade agent isn't an AI challenge; it's a distributed systems challenge. To move beyond simple synchronous script execution, engineers must design platforms that are reliable, secure, and compliant. Here are five architectural pillars for building enterprise-ready agents.

1. The Architecture of Trust: Decoupling Control and Data

The biggest fear enterprise customers have is data leakage, yet many agent architectures send raw data straight to the LLM for processing. A secure architecture requires a different approach.

Control Plane vs. Data Plane: Best-in-class systems treat the LLM as the Control Plane (the orchestrator) and the execution environment as the Data Plane (the worker).

- The Orchestrator: Receives metadata and instructions to plan what to do.

- The Executor: Sits in a secure environment, potentially even within the customer's own cloud, and executes the code on the actual data.

- The Privacy Benefit: By designing the system this way, raw customer data never needs to travel to the LLM provider. The AI directs the work, but it doesn't see the vault.
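A minimal Python sketch of this split follows. The names (`describe`, `orchestrate`, `execute`, `Plan`) are hypothetical, and `orchestrate` is a stub standing in for an LLM call. The point is only that the control plane receives a schema-level summary, while the raw rows stay with the executor.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Any, Dict, List

Row = Dict[str, Any]


@dataclass
class Plan:
    """What the orchestrator decides to do. It contains no customer data."""
    operation: str
    column: str


def describe(rows: List[Row]) -> Dict[str, Any]:
    """Data plane: summarize the dataset as schema-only metadata (no values)."""
    schema = {name: type(value).__name__ for name, value in rows[0].items()}
    return {"row_count": len(rows), "schema": schema}


def orchestrate(metadata: Dict[str, Any], instruction: str) -> Plan:
    """Control plane stand-in (hypothetical). A real system would send
    `metadata` and `instruction` to an LLM; this stub picks the first
    numeric column when asked for an average."""
    numeric = [c for c, t in metadata["schema"].items() if t in ("int", "float")]
    if "average" in instruction.lower() and numeric:
        return Plan(operation="mean", column=numeric[0])
    raise ValueError("stub orchestrator cannot plan this instruction")


def execute(plan: Plan, rows: List[Row]) -> float:
    """Data plane: run the plan against the raw rows, which never leave here."""
    if plan.operation == "mean":
        return mean(row[plan.column] for row in rows)
    raise ValueError(f"unsupported operation: {plan.operation}")


rows = [{"patient": "A", "dose_mg": 10}, {"patient": "B", "dose_mg": 30}]
metadata = describe(rows)
plan = orchestrate(metadata, "What is the average dose?")
print(metadata)  # {'row_count': 2, 'schema': {'patient': 'str', 'dose_mg': 'int'}}
print(execute(plan, rows))  # 20
```

In a real deployment, `describe` and `execute` would run inside the customer's environment, and only the metadata and the plan would cross the network boundary.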

2. From Synchronous Calls to Async Reliability

The Latency Trap: In a prototype, when you ask an agent to "analyze this file," the browser spins while the server crunches the numbers. In production, this is a disaster; complex data analysis takes time, and HTTP connections time out.

The Asynchronous Solution: Production systems need a fully asynchronous architecture. Each request should be accepted immediately and executed as a background job, allowing the system to:

- Handle Scale: Process heavy workloads without blocking the user interface.

- Persist State (Agent Memory): If the user closes their laptop or the browser crashes, the agent keeps working. When the user returns, the state is preserved, and the results are waiting.

In this model, the "conversation" isn't just chat logs; it's a persistent computational state.

3. Safety First: Sandboxing and Guardrails

Sandboxing Infrastructure: Giving an AI the ability to write and execute code is powerful, but risky. You cannot run AI-generated code on your main servers. A robust Sandboxing Infrastructure is essential, ensuring every agent execution happens in an ephemeral, isolated environment. Ideally, the system should support various compute profiles, from small instances for quick scripts to GPU-powered nodes for heavy ML tasks.
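As a rough illustration only, the Python sketch below runs generated code in a separate, short-lived interpreter process with an empty environment (so no credentials leak in), a scratch working directory that is deleted afterwards, and a wall-clock timeout. `run_in_sandbox` is a hypothetical helper, and this is not real isolation: production sandboxes rely on containers or microVMs with network, filesystem, and resource limits, and selecting a compute profile would mean choosing the image or instance type, which is out of scope here.

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExecutionResult:
    returncode: Optional[int]
    stdout: str
    stderr: str
    timed_out: bool


def run_in_sandbox(code: str, timeout_s: float = 5.0) -> ExecutionResult:
    """Run untrusted code in a throwaway process and working directory."""
    with tempfile.TemporaryDirectory() as workdir:  # ephemeral: removed on exit
        try:
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores PYTHON* vars and user site-packages
                cwd=workdir,
                env={},  # start from an empty environment: no API keys or tokens inherited
                capture_output=True,
                text=True,
                timeout=timeout_s,  # the child is killed if it exceeds this
            )
        except subprocess.TimeoutExpired:
            return ExecutionResult(None, "", f"timed out after {timeout_s}s", True)
        return ExecutionResult(proc.returncode, proc.stdout, proc.stderr, False)


print(run_in_sandbox("print(6 * 7)").stdout.strip())  # 42
print(run_in_sandbox("while True: pass", timeout_s=1).timed_out)  # True
```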

Guardrails: Furthermore, one should never trust the LLM blindly. It is critical to implement Guardrails, a layer of analysis that reviews code for security and syntax before it ever hits the sandbox.
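One possible guardrail layer, sketched in Python with the standard `ast` module: parse the generated code to catch syntax errors, then walk the tree to flag imports outside an allowlist and calls to dangerous built-ins. The `ALLOWED_MODULES` and `BLOCKED_CALLS` policies are hypothetical. Static checks like this are easy to evade (for example via `getattr`), so they complement the sandbox rather than replace it.

```python
import ast
from dataclasses import dataclass, field
from typing import List

# Hypothetical policy: modules generated code may import, and built-ins it may never call.
ALLOWED_MODULES = {"math", "statistics", "json", "csv"}
BLOCKED_CALLS = {"eval", "exec", "compile", "open", "__import__"}


@dataclass
class Review:
    ok: bool
    problems: List[str] = field(default_factory=list)


def review_code(code: str) -> Review:
    """Static pre-flight check run before code is sent to the sandbox."""
    try:
        tree = ast.parse(code)
    except SyntaxError as exc:
        return Review(False, [f"syntax error on line {exc.lineno}: {exc.msg}"])

    problems: List[str] = []
    for node in ast.walk(tree):
        # Collect module names from `import x` and `from x import y`.
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            modules = [node.module or "<relative>"]
        else:
            modules = []
        for module in modules:
            if module.split(".")[0] not in ALLOWED_MODULES:
                problems.append(f"import of {module!r} is not allowed")
        # Flag direct calls to blocked built-ins, e.g. eval("...").
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in BLOCKED_CALLS):
            problems.append(f"call to {node.func.id}() is blocked")
    return Review(not problems, problems)


print(review_code("import statistics\nprint(statistics.mean([1, 2]))"))
# Review(ok=True, problems=[])
print(review_code("import os\nos.remove('data.csv')"))
# Review(ok=False, problems=["import of 'os' is not allowed"])
```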

4. Agnosticism and Resilience

Model Agnostic Design: The AI landscape changes weekly, and tying a production product tightly to a single model or provider is a risk. Systems should be engineered to be Model Agnostic. This allows engineering teams to swap the underlying intelligence engine based on performance, cost, or specific capabilities.
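A minimal Python sketch of model-agnostic design: the agent depends only on a small `ChatModel` protocol, each provider gets a thin adapter, and the active model is chosen by a configuration value. `EchoModel`, `ShoutModel`, and `MODEL_REGISTRY` are hypothetical stand-ins; real adapters would wrap each vendor's SDK.

```python
from typing import Dict, Protocol


class ChatModel(Protocol):
    """The only surface the agent depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Hypothetical adapter; a real one would call a vendor SDK here."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutModel:
    """A second hypothetical adapter, to show swapping."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


# Which model backs the agent is a configuration value, not a code change.
MODEL_REGISTRY: Dict[str, ChatModel] = {"echo": EchoModel(), "shout": ShoutModel()}


class Agent:
    def __init__(self, model: ChatModel) -> None:
        self.model = model

    def plan(self, task: str) -> str:
        return self.model.complete(f"plan: {task}")


config = {"model": "shout"}  # e.g. loaded from an environment variable or settings file
agent = Agent(MODEL_REGISTRY[config["model"]])
print(agent.plan("summarize sales"))  # PLAN: SUMMARIZE SALES
```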

Managing Rate Limits: Model agnosticism also helps with Rate Limits. When one provider throttles requests, traffic can fall back to another; combined with tuning context windows and monitoring usage at the infrastructure level, this keeps the agent responsive even during peak provider congestion.
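One way to stay responsive under rate limits is to retry with exponential backoff and jitter, then fall back to another provider if the first stays throttled. The Python sketch below assumes a hypothetical `RateLimitError` standing in for a provider's HTTP 429 response; the `sleep` parameter is injectable so the example can record delays instead of actually waiting.

```python
import random
import time
from typing import Callable, List, Sequence, Tuple


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's HTTP 429 response."""


Provider = Tuple[str, Callable[[str], str]]


def call_with_fallback(
    providers: Sequence[Provider],
    prompt: str,
    max_retries: int = 3,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[str, str]:
    """Try providers in priority order. On a rate limit, back off exponentially
    with jitter; after max_retries attempts, move on to the next provider."""
    for name, complete in providers:
        for attempt in range(max_retries):
            try:
                return name, complete(prompt)
            except RateLimitError:
                if attempt < max_retries - 1:
                    # Delay doubles each attempt; jitter spreads out clients retrying together.
                    sleep(base_delay_s * (2 ** attempt) * random.uniform(0.5, 1.0))
    raise RuntimeError("every provider is rate limited")


def always_throttled(prompt: str) -> str:
    raise RateLimitError("429 Too Many Requests")


def healthy(prompt: str) -> str:
    return f"ok: {prompt}"


delays: List[float] = []  # record delays instead of actually sleeping
print(call_with_fallback([("primary", always_throttled), ("secondary", healthy)], "hi", sleep=delays.append))
# ('secondary', 'ok: hi')
print(len(delays))  # 2
```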

5. Compliance as Code

Beyond Functionality: For enterprise adoption, "it works" isn't enough; it must be compliant. When dealing with high-stakes domains, infrastructure must be built from the ground up to support SOC 2 and ISO controls.

Regulatory Standards: For specific industries, systems must integrate GxP compliance standards and workflows that enforce Human-in-the-Loop verification, a critical requirement for FDA-regulated environments. This cannot be an afterthought; it must be baked into the infrastructure as code (IaC) that deploys the solution.
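To make Human-in-the-Loop verification concrete, here is a hypothetical Python sketch of an approval gate: agent output is held as pending until a named human reviewer approves or rejects it, the agent cannot review its own work, and every transition is appended to an audit trail. `ApprovalGate` and `AuditEntry` are illustrative names; a validated GxP system would also need tamper-evident storage and electronic signatures, so this shows only the control flow.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional


@dataclass(frozen=True)
class AuditEntry:
    timestamp: str
    actor: str
    action: str
    item_id: str


@dataclass
class ApprovalGate:
    pending: Dict[str, str] = field(default_factory=dict)
    audit_log: List[AuditEntry] = field(default_factory=list)

    def _record(self, actor: str, action: str, item_id: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(AuditEntry(stamp, actor, action, item_id))

    def submit(self, item_id: str, content: str) -> None:
        """The agent can only propose; nothing is released at this point."""
        self.pending[item_id] = content
        self._record("agent", "submitted", item_id)

    def decide(self, item_id: str, reviewer: str, approved: bool) -> Optional[str]:
        """Release the content if a human approves it; return None if rejected."""
        if reviewer == "agent":
            raise PermissionError("the agent cannot review its own output")
        if item_id not in self.pending:
            raise KeyError(f"no pending item {item_id!r}")
        content = self.pending.pop(item_id)
        self._record(reviewer, "approved" if approved else "rejected", item_id)
        return content if approved else None


gate = ApprovalGate()
gate.submit("report-7", "Batch passes release criteria")
released = gate.decide("report-7", reviewer="qa.reviewer", approved=True)
print(released)  # Batch passes release criteria
print([(e.actor, e.action) for e in gate.audit_log])
# [('agent', 'submitted'), ('qa.reviewer', 'approved')]
```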

Conclusion: Putting Principles into Practice

Building a demo agent requires good prompting. Building a production agent requires good engineering.

At Keiji AI, we realized early on that these distributed systems challenges were the key to unlocking enterprise value. By focusing on security, asynchronous processing, and robust system design, we've built an agent that doesn't just chat; it works and solves real-world problems.