November 17, 2025 · Jimeng Sun, PhD, CEO of Keiji AI

The Missing Pieces in AI Integration

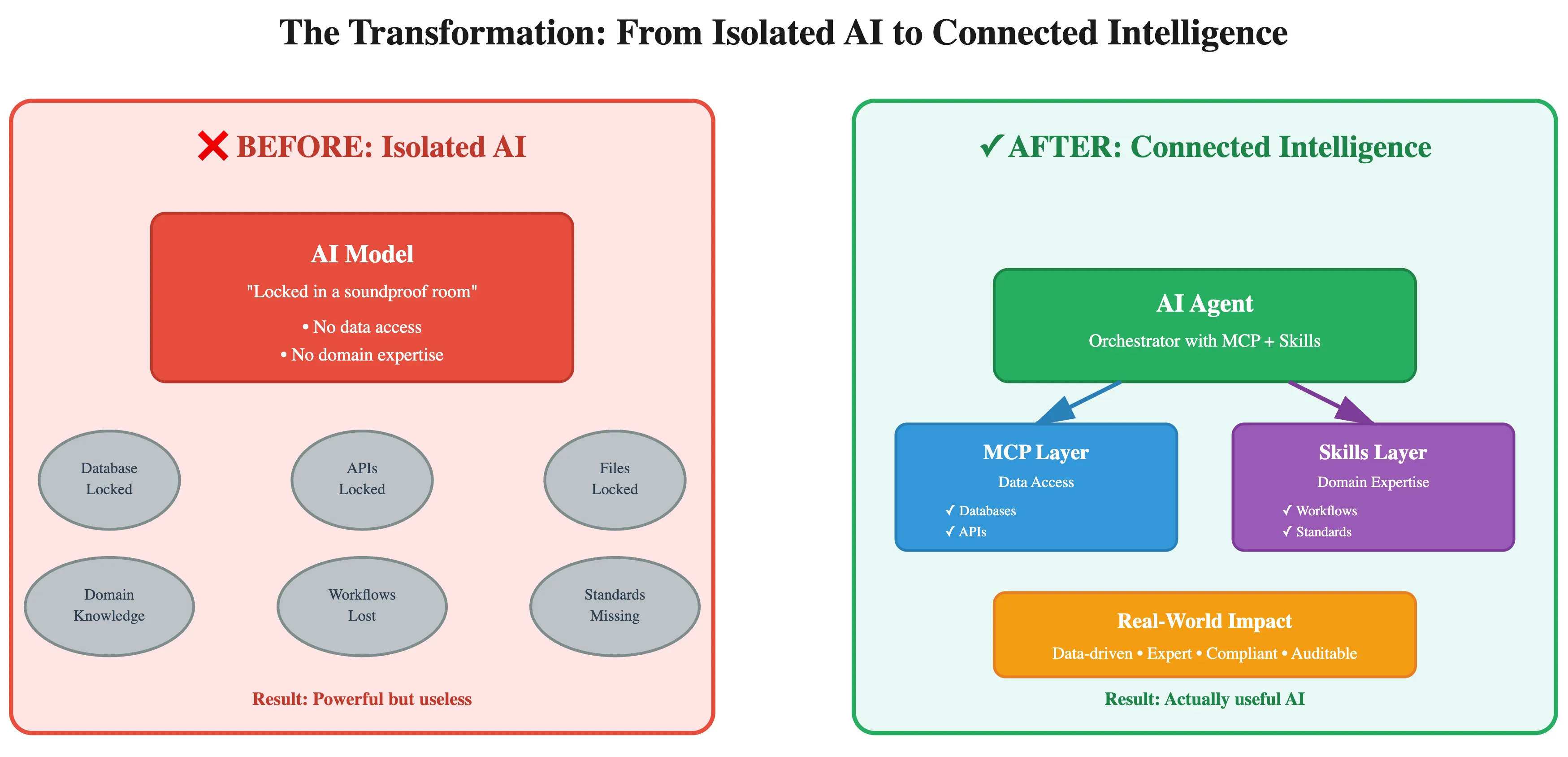

Imagine you're building the perfect AI assistant for your organization. You have cutting-edge language models that can reason, write, and analyze. But there's a problem: your AI is like a brilliant consultant locked in a soundproof room with no internet, no access to your company's databases, and no knowledge of your company's specific workflows.

This is the reality most organizations face when implementing AI. The models are powerful, but they are islands, cut off from the enterprise data and domain expertise that make them truly useful.

Enter two complementary innovations from Anthropic: Model Context Protocol (MCP) and Claude Skills. Together, they're transforming isolated AI models into connected, knowledgeable agents that can actually get work done.

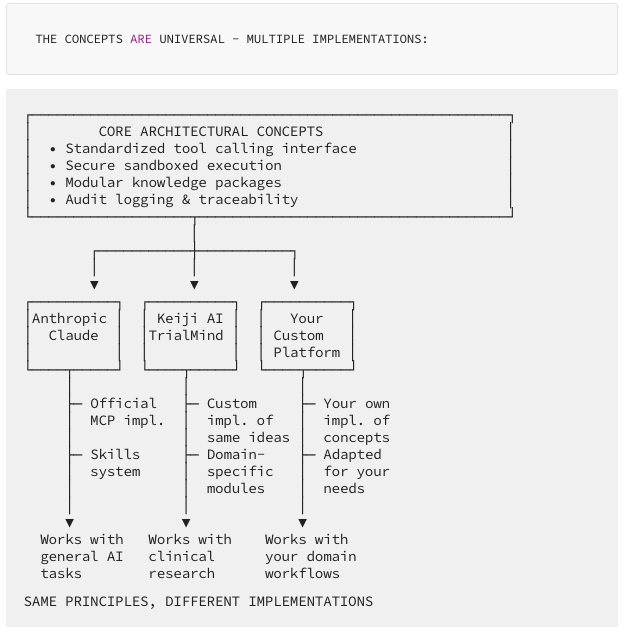

A Critical Clarification

While we'll discuss MCP and Skills through Anthropic's implementation, these are open architectural concepts, not proprietary software. MCP is an open standard that any AI system can adopt. The underlying principles — standardized data connectivity and modular knowledge packages — can be implemented in any AI agent pipeline, whether you're using OpenAI, open-source models, or building your own proprietary system. This article explores the ideas and patterns that make AI agents effective, not specific vendor solutions. For example, at Keiji AI, we implemented these concepts via our own MCP servers and Skills (secure sandbox, tool calling and guidance memory).

What is MCP? Your AI's Universal Data Port

Think of MCP as a USB-C port for AI applications. Just as USB-C created a universal standard for connecting devices, MCP creates a universal standard for connecting AI to data sources.

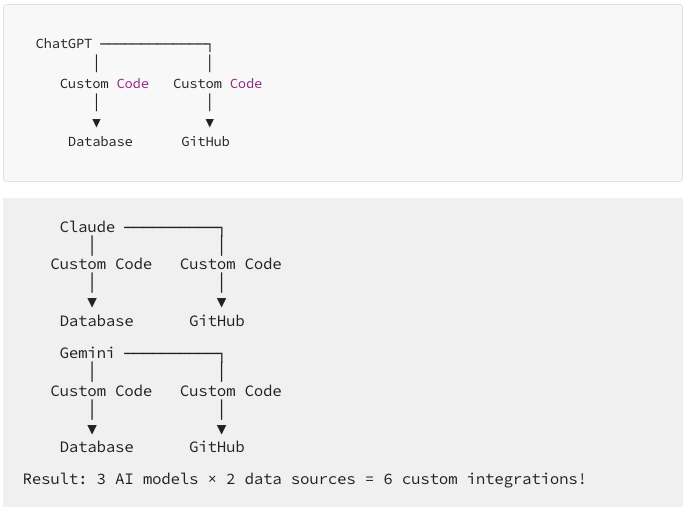

Before MCP, every time you wanted to connect your AI to a new data source — whether that's your company's database, GitHub repositories, or a CRM system — you needed custom code. A separate integration for each connection. It was like needing a different cable for every device you own.

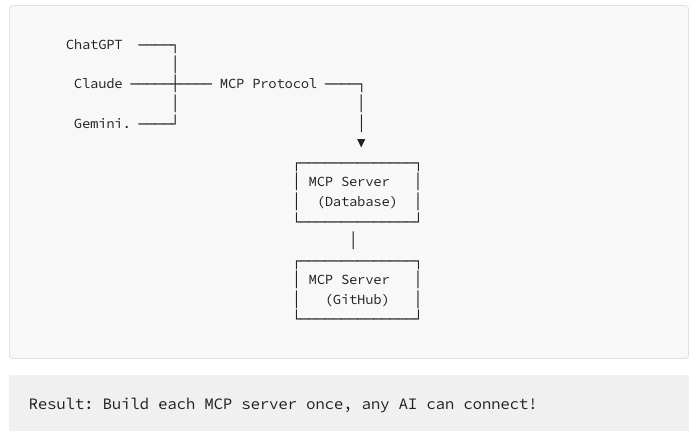

MCP changes this by providing a standardized protocol. Build one MCP server for your data source, and any MCP-compatible AI application can connect to it. It's a shift from an "N×M problem" (every AI application needing a custom integration with every data source) to a "plug-and-play" ecosystem.
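The arithmetic behind that shift is easy to make concrete. The sketch below assumes a hypothetical organization with 4 AI applications and 6 data sources; the counts and function names are illustrative only.

```python
def point_to_point(n_apps: int, n_sources: int) -> int:
    """Custom integrations needed when every app is wired to every source."""
    return n_apps * n_sources


def shared_protocol(n_apps: int, n_sources: int) -> int:
    """Components to build when both sides speak one protocol:
    one client per app plus one server per source."""
    return n_apps + n_sources


apps, sources = 4, 6  # hypothetical counts
print(point_to_point(apps, sources))   # 24
print(shared_protocol(apps, sources))  # 10
```

Adding a seventh data source costs four more integrations in the first model and one more server in the second.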

The Three Building Blocks

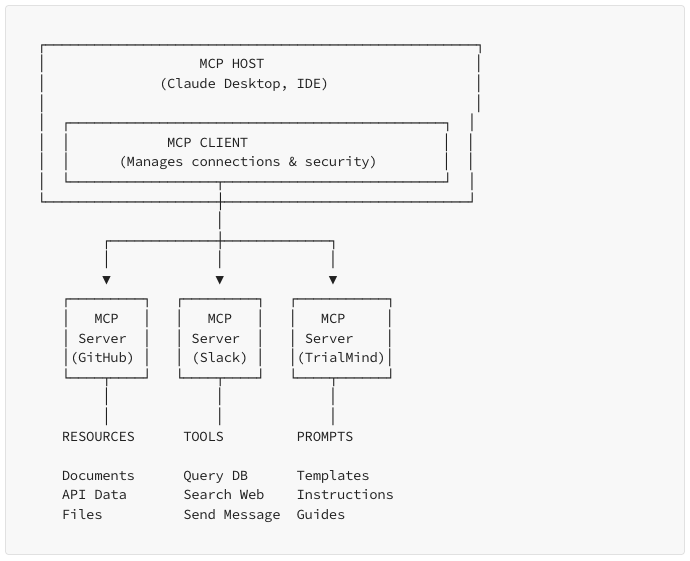

MCP defines three core concepts that make this work:

- Resources are the data itself — documents, database records, API responses. Think of these as the content your AI can read from.

- Tools are actions your AI can take — querying a database, searching the web, updating a record. These are the verbs in your AI's vocabulary.

- Prompts are pre-configured instructions that guide how your AI interacts with these resources and tools.
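To make the three building blocks tangible, here is a minimal sketch in plain Python. It is not the official MCP SDK: `ToyServer`, the `crm://` URI, and the function names are all hypothetical, chosen only to show how a resource, a tool, and a prompt differ.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server; not the real SDK."""
    name: str
    resources: Dict[str, Callable[[], str]] = field(default_factory=dict)
    tools: Dict[str, Callable[..., object]] = field(default_factory=dict)
    prompts: Dict[str, str] = field(default_factory=dict)

    def resource(self, uri: str):
        """Decorator that registers a read-only data provider under a URI."""
        def register(fn):
            self.resources[uri] = fn
            return fn
        return register

    def tool(self, fn):
        """Decorator that registers a callable action under its own name."""
        self.tools[fn.__name__] = fn
        return fn


server = ToyServer("crm-demo")


@server.resource("crm://accounts/summary")
def accounts_summary() -> str:
    # Resource: content the model can read into its context.
    return "3 open accounts, 1 overdue"


@server.tool
def update_status(account_id: int, status: str) -> dict:
    # Tool: an action (here it only echoes the requested change).
    return {"account_id": account_id, "status": status}


# Prompt: a reusable instruction template that ties the two together.
server.prompts["weekly-review"] = (
    "Read {resource} and flag any account that needs a status update."
)

print(server.resources["crm://accounts/summary"]())
print(server.tools["update_status"](42, "closed"))
print(server.prompts["weekly-review"].format(resource="crm://accounts/summary"))
```

The point of the split is that reading, acting, and instructing are registered separately, so a client can list and control each kind on its own.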

Why This Matters

The power of MCP lies in its standardization. When a company builds an MCP server for its proprietary system, it works not just with Claude, but with any AI application that supports MCP. OpenAI, Google DeepMind, and major development tools like VS Code and Replit are all adopting it.

More importantly, because every tool call passes through one well-defined interface, MCP gives you a natural place to add logging and audit trails. That is critical for regulated industries where every AI decision needs to be traceable.

What are Claude Skills? Teaching AI Your Way of Working

If MCP is about connecting to data, Skills are about teaching expertise. They're modular packages that encode your organization's specific knowledge, workflows, and best practices.

Here's the key insight: having access to data isn't enough. Your AI also needs to know how to use that data according to your workflows.

Imagine you're onboarding a new clinical research associate to run a clinical trial. You don't just give them access to all your systems and hope for the best. You teach them your methodology — how to structure reports, which metrics matter, what quality standards to meet, and the step-by-step process for different types of analysis.

Skills do the same for AI.

How Skills Work

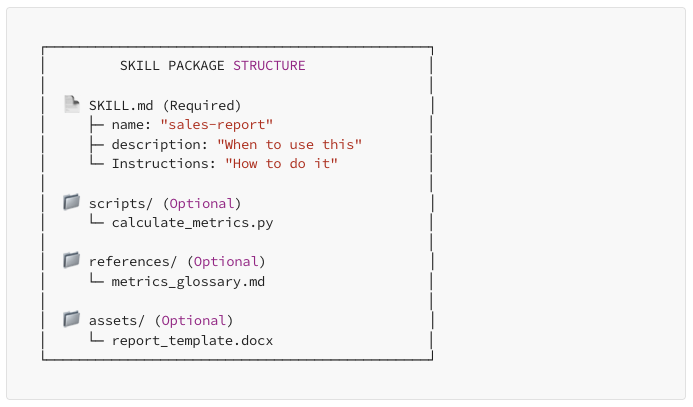

A Skill is a simple package containing:

- Instructions written in plain Markdown that explain the workflow

- Scripts for repetitive or complex calculations

- Templates for consistent output formatting

- References for domain-specific knowledge

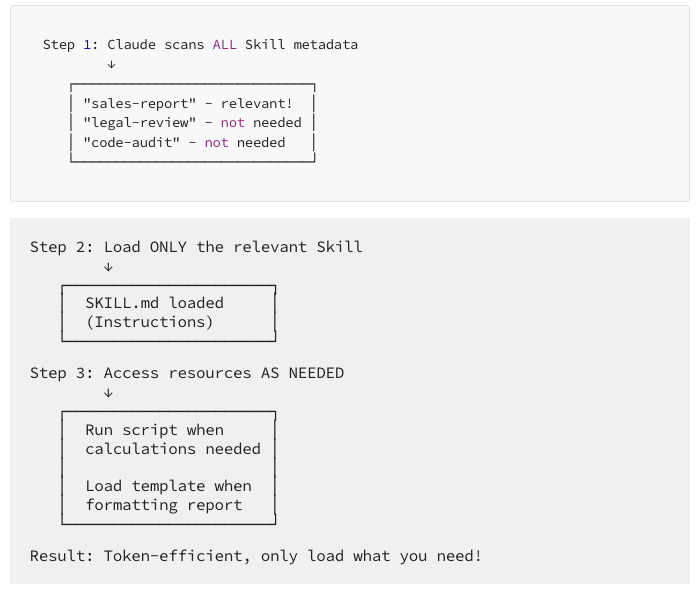

The genius is in the progressive disclosure:

Claude doesn't load every Skill into memory all the time. Instead, it scans Skill descriptions, identifies which ones are relevant to your current task, and loads only what's needed. This keeps things token-efficient while making expertise available on demand.
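Progressive disclosure can be sketched in a few lines. The example below is an assumption-laden toy: `SkillStub`, `select_skills`, and the keyword-overlap relevance check are hypothetical stand-ins (a real agent lets the model judge relevance from the descriptions), and the Skill bodies are invented placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List

loaded: List[str] = []  # names of Skills whose full body was actually read


def make_loader(name: str, body: str) -> Callable[[], str]:
    """Return a loader that records when the full Skill body is fetched."""
    def load() -> str:
        loaded.append(name)
        return body
    return load


@dataclass
class SkillStub:
    name: str
    description: str              # short text, always visible to the agent
    load_body: Callable[[], str]  # full instructions, fetched on demand


def select_skills(task: str, skills: List[SkillStub]) -> List[SkillStub]:
    """Naive relevance check: a description word also appears in the task."""
    task_words = set(task.lower().split())
    return [s for s in skills if task_words & set(s.description.lower().split())]


skills = [
    SkillStub("competitive-analysis", "evaluate competitors pricing positioning",
              make_loader("competitive-analysis", "Compare pricing tiers first.")),
    SkillStub("contract-review", "review contract clauses risk",
              make_loader("contract-review", "Check indemnity and termination clauses.")),
    SkillStub("model-validation", "validate statistical model assumptions",
              make_loader("model-validation", "Start with a holdout set.")),
]

task = "Please review this vendor contract"
context = [s.load_body() for s in select_skills(task, skills)]
print(loaded)  # ['contract-review']
```

Only the one matching Skill body enters the context; the other two cost nothing beyond their one-line descriptions.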

Real-World Impact

A marketing team might create a "competitive-analysis" Skill that defines their exact methodology for evaluating competitors. A legal team might build a "contract-review" Skill that encodes their checklist and risk assessment framework. A data science team might create a "model-validation" Skill that ensures consistent statistical practices.

Once created, these Skills can be shared across the entire organization, ensuring everyone — or rather, every AI interaction — follows the same standards.

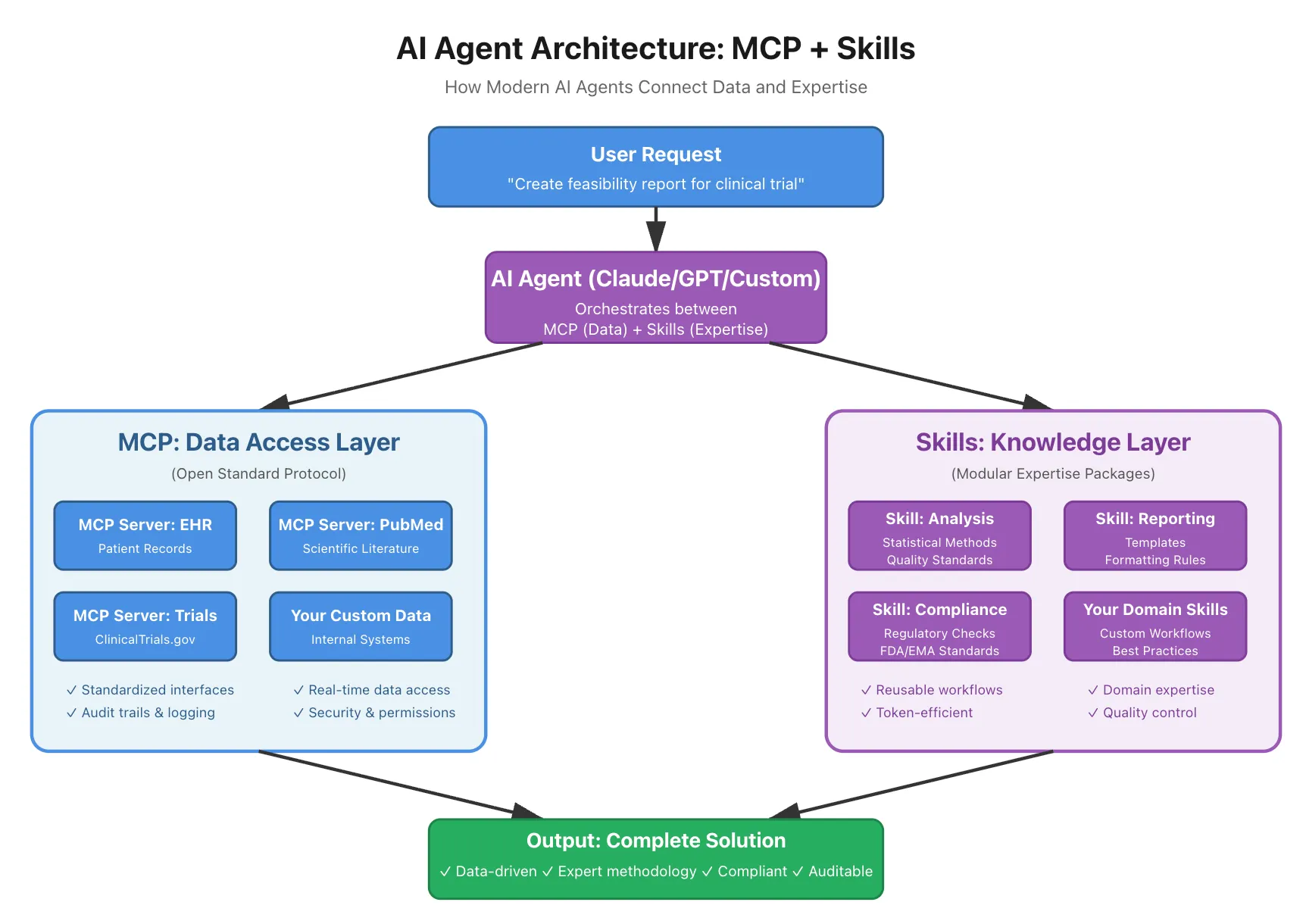

The Real Power: MCP + Skills Together

Here's where it gets interesting. MCP and Skills aren't alternatives; they're designed to work together. To illustrate, let's look at how TrialMind, an AI agent from Keiji AI, uses both to support clinical research and run clinical trials in one of the most regulated, high-stakes industries: pharmaceutical research.

Case Study: TrialMind and Clinical Research

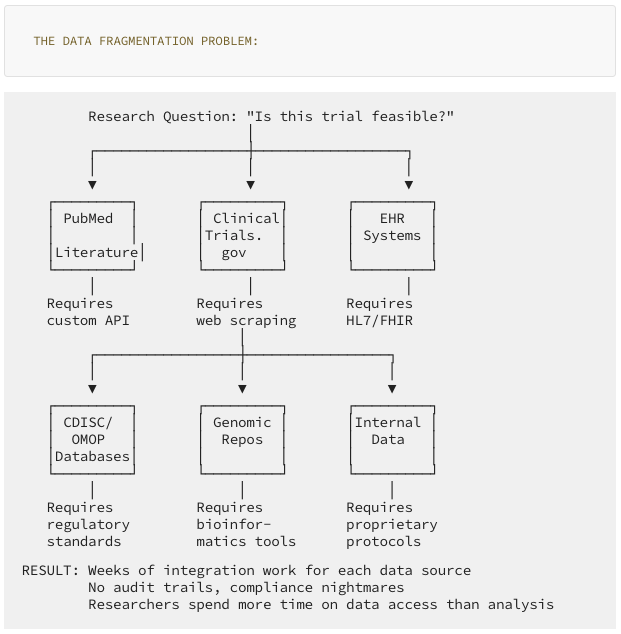

Pharmaceutical companies face a brutal reality: they spend over $50 billion annually on clinical trials, yet 90% of those trials fail. A major reason is the fragmentation of data. Critical information is scattered across:

- Scientific literature databases like PubMed

- Trial registries like ClinicalTrials.gov

- Electronic Health Records at thousands of hospitals

- Regulatory databases using standards like CDISC and OMOP

- Genomic repositories

- Internal experimental data

Traditionally, accessing each system required custom code, specialized query languages, and weeks of integration work. More importantly, in healthcare, every query must be logged for regulatory compliance. The FDA and EMA demand complete traceability.

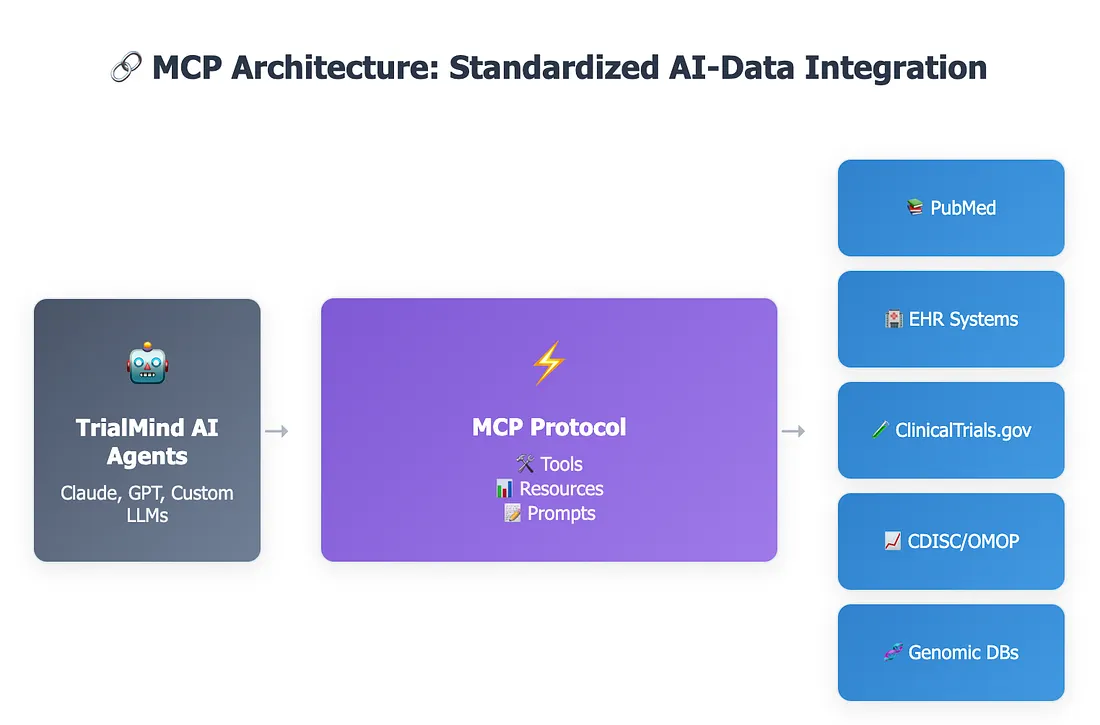

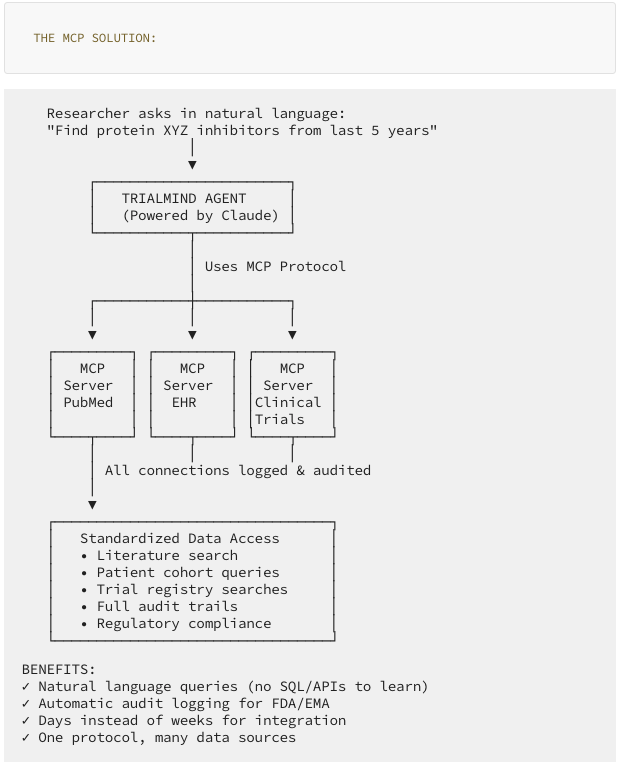

Enter TrialMind

TrialMind, developed by Keiji AI, uses MCP and Skills to transform clinical research workflows.

An Important Note on Implementation: While we've been discussing MCP and Skills in the context of Anthropic's Claude, it's crucial to understand that these are architectural concepts and design patterns, not proprietary software locked to a single vendor. MCP is an open standard that can be implemented in any AI agent pipeline. The core ideas — secure sandboxed environments, standardized tool calling, and modular knowledge packages — are universal principles for building robust AI agent workflows.

At Keiji AI, we implemented our own TrialMind AI agent platform using these same fundamental concepts (secure sandbox + tool calling + modular expertise) specifically optimized for clinical research and clinical trial applications. You don't need to use Claude or Anthropic's specific implementations. Whether you're building with OpenAI, open-source models, or proprietary systems, the architectural patterns of separating data connectivity from domain expertise remain powerful and applicable.

This is about how to think about AI agent architecture, not about which vendor to choose.

The MCP Layer: For TrialMind, Keiji AI built its own implementation of the MCP concepts: specialized servers and tool-calling infrastructure for each clinical data source. This includes a PubMed integration for literature searches, an EHR connector for patient data, and interfaces with clinical trial registries. While inspired by the MCP standard, this is Keiji AI's own infrastructure, optimized for the unique security, compliance, and performance requirements of clinical research.

The key innovation isn't the specific software but the system architecture: standardized tool interfaces, secure sandboxed execution, and comprehensive audit logging. These are the core principles that make MCP powerful.

Here's what this enables:

A researcher can simply ask: "Find inhibitors of protein XYZ reported in the last 5 years"

Behind the scenes, the agent invokes the PubMed MCP server, which handles all the complexity of querying the database with the right API calls and data formats. The researcher gets current, cited results without visiting any websites or learning query syntax.

Or consider: "How many patients in our EHR system meet the eligibility criteria for Trial XYZ?"

The EHR MCP server queries the clinical database, applies the criteria, and returns patient cohorts with recruitment estimates and visualizations, all while automatically logging every query for FDA compliance.
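The pattern of wrapping every data query in an audit record can be sketched as follows. This is not TrialMind's code: the `audited` decorator, the `count_eligible` tool, the criteria, and the four synthetic patient records are all hypothetical, and an in-memory list stands in for a real append-only audit store.

```python
import functools
import json
from datetime import datetime, timezone
from typing import Callable, List

AUDIT_LOG: List[dict] = []  # stand-in for an append-only audit store


def audited(tool: Callable) -> Callable:
    """Record tool name, arguments, and a UTC timestamp before each call."""
    @functools.wraps(tool)
    def wrapper(**kwargs):
        AUDIT_LOG.append({
            "tool": tool.__name__,
            "args": kwargs,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return tool(**kwargs)
    return wrapper


# Synthetic records for illustration; no real patient data.
PATIENTS = [
    {"id": 1, "age": 54, "diagnosis": "NSCLC", "prior_chemo": False},
    {"id": 2, "age": 71, "diagnosis": "NSCLC", "prior_chemo": True},
    {"id": 3, "age": 47, "diagnosis": "CRC", "prior_chemo": False},
    {"id": 4, "age": 63, "diagnosis": "NSCLC", "prior_chemo": False},
]


@audited
def count_eligible(diagnosis: str, max_age: int, allow_prior_chemo: bool) -> int:
    """Apply inclusion and exclusion rules and return the cohort size."""
    return sum(
        1 for p in PATIENTS
        if p["diagnosis"] == diagnosis
        and p["age"] <= max_age
        and (allow_prior_chemo or not p["prior_chemo"])
    )


n = count_eligible(diagnosis="NSCLC", max_age=65, allow_prior_chemo=False)
print(n)  # 2
print(json.dumps(AUDIT_LOG[-1]["args"]))
```

Because the log entry is written by the wrapper rather than by the tool itself, no individual tool author can forget to log.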

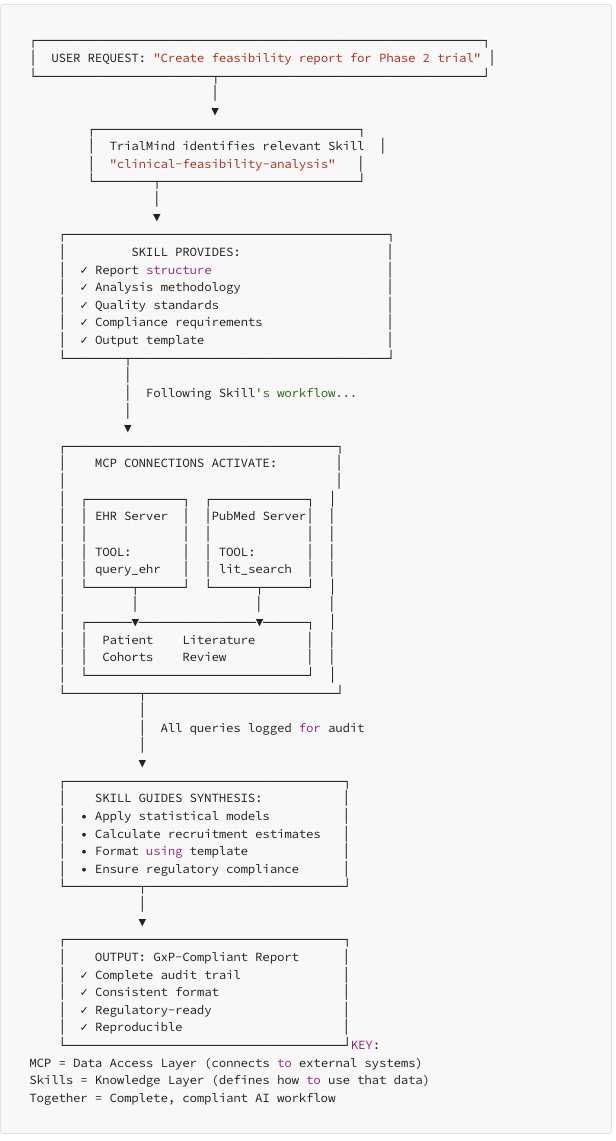

The Skills Layer: But data access alone isn't enough. TrialMind also includes Skills that encode validated research methodologies.

A "clinical-feasibility-analysis" Skill defines the exact workflow for evaluating whether a trial is viable:

- Review protocol inclusion/exclusion criteria

- Query patient populations via MCP

- Analyze competitive landscape through trial registries

- Review current literature for treatment standards

- Generate a report using regulatory-approved templates

- Ensure all data access is logged for audit trails

The Skill provides the methodology. The MCP connections provide the data. Together, they enable something remarkable.

The Transformation

A clinical researcher can now ask in plain language: "Create a feasibility report for our Phase 2 oncology trial"

The system:

- Loads the appropriate Skill (defines what a good feasibility report looks like)

- Activates relevant MCP connections (accesses real patient data, literature, trial registries)

- Follows the Skill's workflow (applies the right analytical framework)

- Generates a report that's regulatory-ready with full audit trails
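The four steps above can be sketched as a small orchestration loop. Everything here is hypothetical: the tool names, the structure of `FEASIBILITY_SKILL`, and the canned return values are placeholders rather than TrialMind's actual interfaces or real trial data.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; each returns canned text in place of a live connector.
TOOLS: Dict[str, Callable[[], str]] = {
    "ehr.count_eligible": lambda: "128 eligible patients",
    "registry.search": lambda: "5 competing trials",
    "pubmed.search": lambda: "12 recent publications",
}

# Hypothetical Skill: ordered steps plus an output template.
FEASIBILITY_SKILL = {
    "name": "clinical-feasibility-analysis",
    "steps": [
        ("Patient population", "ehr.count_eligible"),
        ("Competitive landscape", "registry.search"),
        ("Literature", "pubmed.search"),
    ],
    "template": "Feasibility report\n{sections}",
}


def run_skill(skill: dict, tools: Dict[str, Callable[[], str]]) -> Tuple[str, List[str]]:
    """Follow the Skill's steps in order, calling one tool per step."""
    audit: List[str] = []
    sections: List[str] = []
    for heading, tool_name in skill["steps"]:
        result = tools[tool_name]()  # data comes from the tool layer
        audit.append(tool_name)      # every call is recorded, in order
        sections.append(f"- {heading}: {result}")
    report = skill["template"].format(sections="\n".join(sections))
    return report, audit


report, audit = run_skill(FEASIBILITY_SKILL, TOOLS)
print(report)
print(audit)
```

Note the division of labor: the Skill decides what happens and in what order, the tools decide how data is fetched, and the loop itself stays generic.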

What previously took weeks of custom queries, manual data gathering, and Excel manipulation now happens in minutes — with better compliance than manual processes.

Why This Matters for Regulated Industries

The TrialMind example reveals why the MCP + Skills combination is particularly powerful for healthcare, finance, legal, and other regulated sectors:

- Auditability: Every MCP tool call is automatically logged. Regulatory reviewers can trace every piece of data used in a decision back to its source.

- Reproducibility: The combination of logged data access and documented workflows means analyses can be exactly reproduced — critical for regulatory submissions.

- Compliance by Design: Rather than hoping users follow procedures, the procedures are encoded into Skills that guide every interaction. The architecture can also separate code generation (the AI layer) from code execution (the secure data layer).

- Speed Without Chaos: Integration that used to take weeks now takes days, but with more control and oversight than custom integrations typically provided.
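The separation of code generation from code execution mentioned under Compliance by Design can be illustrated with a deliberately simple sketch. It uses an allowlist of vetted queries, which is a narrower stand-in for a full sandbox, and every name in it (`ALLOWED_QUERIES`, `execute_request`, the `enrollment` table and its rows) is hypothetical.

```python
import sqlite3

# Secure data layer: owns the connection and an allowlist of vetted queries.
ALLOWED_QUERIES = {
    "count_by_site": "SELECT COUNT(*) FROM enrollment WHERE site = ?",
}


def make_db() -> sqlite3.Connection:
    """Build an in-memory database with synthetic rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE enrollment (patient_id INTEGER, site TEXT)")
    conn.executemany(
        "INSERT INTO enrollment VALUES (?, ?)",
        [(1, "Boston"), (2, "Boston"), (3, "Chicago")],
    )
    return conn


def execute_request(conn: sqlite3.Connection, request: dict) -> int:
    """Run a request only if it names a vetted query; reject anything else."""
    sql = ALLOWED_QUERIES.get(request["query"])
    if sql is None:
        raise PermissionError(f"query not allowed: {request['query']}")
    return conn.execute(sql, tuple(request["params"])).fetchone()[0]


conn = make_db()

# AI layer: proposes what to run as plain data; it never touches the database.
proposed = {"query": "count_by_site", "params": ["Boston"]}
print(execute_request(conn, proposed))  # 2

try:
    execute_request(conn, {"query": "drop_everything", "params": []})
except PermissionError as err:
    print(err)  # query not allowed: drop_everything
```

The model's output is treated as a request to be validated, never as code to be trusted, which is the property regulators tend to care about.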

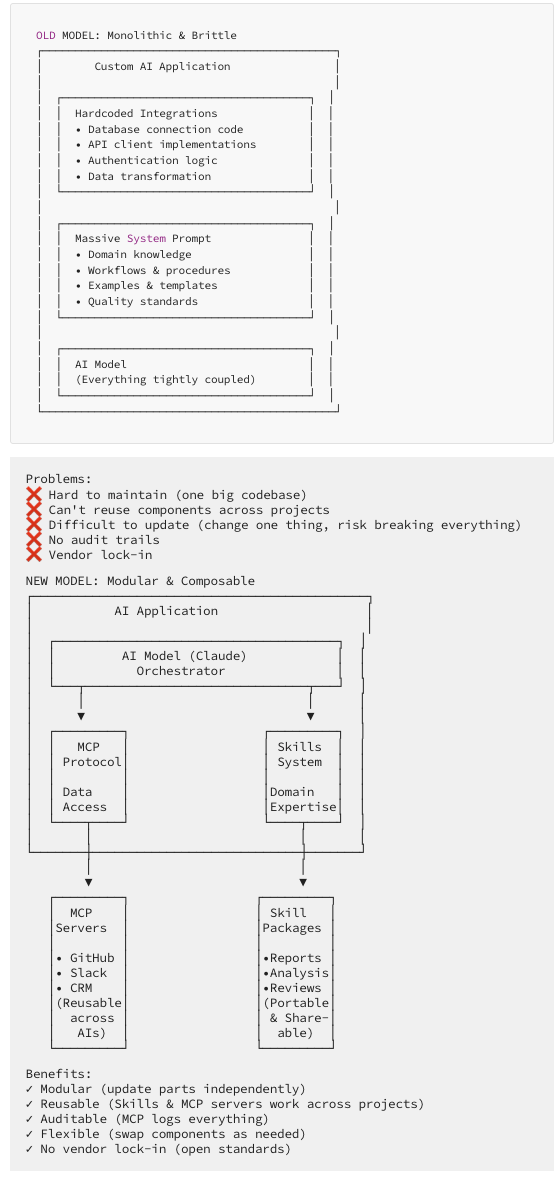

The Bigger Picture: A New Architecture for AI

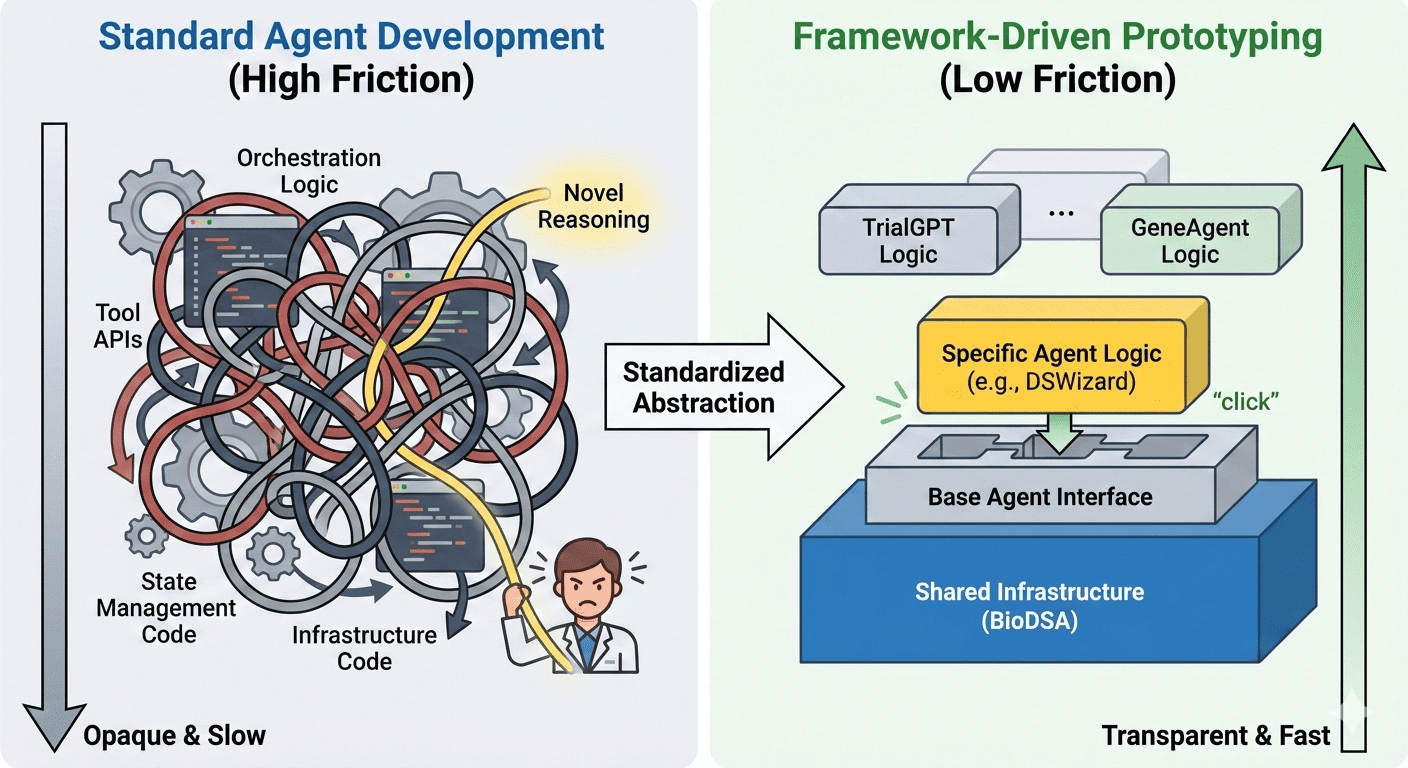

What MCP and Skills represent is a fundamental shift in how we think about AI integration.

The Old Model was monolithic: build a custom AI application with hard-coded integrations and hope your prompt engineering captures all the domain knowledge.

The New Model is modular and composable:

- MCP provides standardized data connectivity

- Skills provide portable domain expertise

- AI models orchestrate between them

This architecture solves several critical problems:

- The Integration Problem: Instead of N×M custom integrations (every AI with every data source), you build each connector once against the MCP standard, so the work grows as N+M.

- The Knowledge Problem: Instead of embedding expertise in impossible-to-maintain prompts, you encode it in shareable, versionable Skills.

- The Compliance Problem: Instead of hoping AI behaves correctly, you build guardrails into the architecture itself through logged MCP calls and validated Skills.

- The Scaling Problem: Adding new data sources or capabilities becomes dramatically easier when you're working with standards rather than custom code.

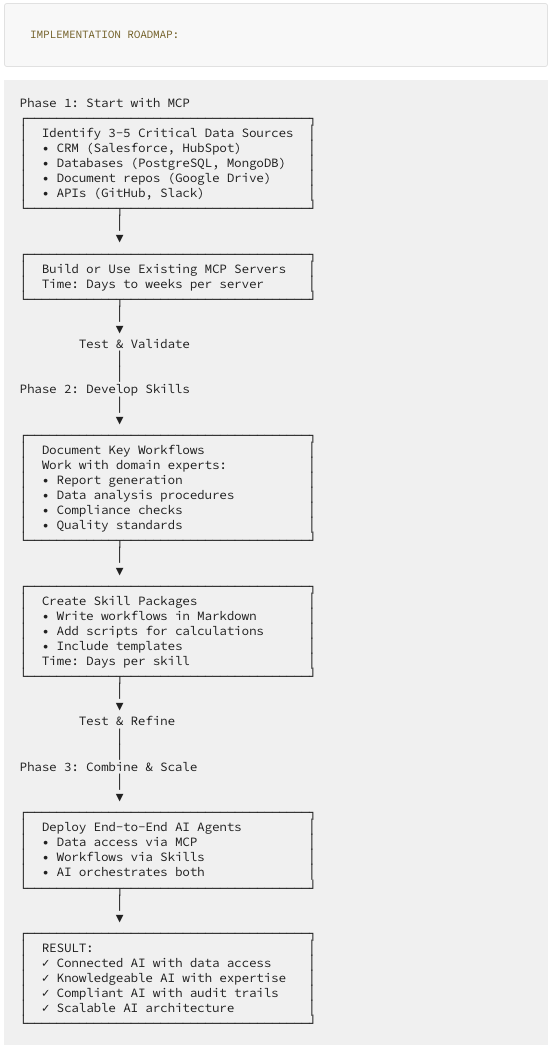

What This Means for Your Organization

If you're considering AI implementation, the MCP + Skills framework offers a practical path forward:

Start with MCP for your critical data sources. Identify the 3–5 systems your AI must access — whether that's your CRM, databases, document repositories, or external APIs — and build or use existing MCP servers for them.

Develop Skills for your key workflows. Work with domain experts to document their methodologies in Skills. A good first project is often report generation or data analysis where workflows are established but time-consuming.

Combine them for end-to-end automation. Once you have both data access and encoded expertise, you can build AI agents that truly understand your business.

Choose Your Implementation Path:

- Use existing standards: Adopt Anthropic's MCP and Skills implementations if they fit your needs

- Build custom solutions: Like Keiji AI, implement these architectural patterns in your own infrastructure optimized for your domain

- Hybrid approach: Use standard MCP for common integrations, custom implementations for specialized needs

The power lies in the architecture, not the specific implementation. The best part? Because MCP is an open standard and the modular patterns are universal, you're not locked into any single vendor. Build once, benefit across your entire AI stack, regardless of which LLMs or platforms you choose to use.

The Road Ahead

We're in the early days, but adoption is accelerating. Thousands of MCP servers exist, and major AI companies are adopting these patterns. Industries from healthcare to finance are building their own implementations.

The key insight: These aren't proprietary features — they're emerging as architectural standards for AI agent design. Whether you call it "MCP" or build your own protocol, standardized tool calling with secure sandboxes is becoming universal. Whether you call them "Skills" or "modules" or "guidance," separating domain expertise from prompts is proving essential.

Companies like Keiji AI with TrialMind prove you can implement these principles in your own systems, optimized for your domain, without vendor lock-in.

The promise: AI that's connected, knowledgeable, compliant, and actually useful for your organization's specific work. That's not a distant future — it's happening now.

Key Takeaways

- MCP is an open standard for data connectivity: a protocol, not proprietary software

- Skills represent the pattern of modular, domain-specific expertise packages — an architectural approach applicable to any AI system

- Together, these concepts enable AI that's both connected to data and knows how to use it properly

- Implementation flexibility: TrialMind demonstrates these principles can be implemented in custom platforms optimized for specific domains (clinical research, in their case)

- Core concepts are universal: Secure sandboxes + tool calling + modular knowledge = effective AI agent architecture

- Critical for compliance: Built-in audit trails and validated workflows make AI suitable for regulated industries

- The future: Not about specific vendors, but about architectural patterns for building capable, trustworthy AI agents

The Bottom Line: Whether you use Anthropic's implementation, build your own like Keiji AI did, or use another provider's tools, the architectural principles of separating data access from domain expertise are what make AI agents truly effective in production environments.

Further Reading

- Model Context Protocol: https://modelcontextprotocol.io/

- Claude Skills Documentation: https://www.claude.com/blog/skills-explained

- Anthropic Courses: https://anthropic.skilljar.com/